|

4.1.2 Overview:

Search Algorithms Continuous speech recognition is both a pattern recognition and search problem. The decoding process of a speech recognizer finds the most probable sequence of words given the acoustic and language models. Recall our equation from Section 4.1.1 for finding the most probable word sequence. The complexity of search algorithms depends on many things, including the number and types of networks being searched. Speech recognition typically uses a hierarchical Viterbi beam search algorithm for decoding because of its speed and simplicity of design. Search is described extensively in this tutorial article:

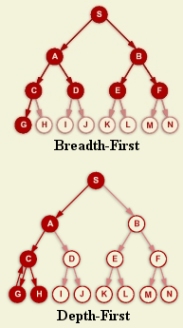

The search process is depicted in the figures shown to the right.

The most straightforward solution is to explore a single path until

its conclusion. If this path does not terminate on

a goal state, we backtrack and continue with another path. This

technique is similar to a maze. One might find a possible route, but

it may not be the shortest route.

Breadth-first search

explores all alternatives simultaneously level by level.

Best-first search

uses an evaluation function, h(N), which indicates the relative goodness of

pursuing that node. If we combine this with the partial path score,

we can define a general evaluation function:

The search process is depicted in the figures shown to the right.

The most straightforward solution is to explore a single path until

its conclusion. If this path does not terminate on

a goal state, we backtrack and continue with another path. This

technique is similar to a maze. One might find a possible route, but

it may not be the shortest route.

Breadth-first search

explores all alternatives simultaneously level by level.

Best-first search

uses an evaluation function, h(N), which indicates the relative goodness of

pursuing that node. If we combine this with the partial path score,

we can define a general evaluation function:

Viterbi beam search is a form of breadth-first search. The key in any search is the bookkeeping and use of efficient data structures. To maintain optimality in the search, we keep a history of predecessor words and states, since the same word sequence can be produced by multiple paths in the network. We terminate hypotheses that do not appear promising, based on some threshold, and free the memory space associated with storing this obsoleted information. When using search techniques, a process known as pruning is necessary. Pruning removes unlikely paths from consideration and saves resource usage in both memory and time. In Viterbi pruning, pruning takes place at the lowest level after evaluation of the statistical model. Paths with the same history can be compared - the best scorer is propagated and the other is deleted. Viterbi pruning must have an efficient storage scheme so that we only have to compare a small number of data elements to determine those that are comparable. Recognition systems use many forms of pruning. In challenging environments, such as conversational speech collected over noisy telephone lines, extremely aggressive pruning is required to avoid exceeding the physical memory capacities of your computer. In the next section, we will introduce you to many parameters available to control the behavior of the recognizer. Included in this discussion are several methods of pruning which are essential for developing efficient recognition systems. We also have an interactive demonstration of the search process that is an excellent visualization of the search process as it unfolds over time. Users can interact with recognition parameters and follow alternate paths representing word sequences as they evolve over time. Various levels of knowledge (e.g., phone hypotheses) in the recognition process can be viewed as well. |