4.1.1 Overview:

In this section, we describe how to use the recognition utility, isip_recognize, to implement the search portion of the speech recognition problem and to produce the overall most probable transcription of the input utterance. Decoding the spoken words requires search algorithms that narrow the space of possible word sequences. Because an optimal, exhaustive search is computationally prohibitive for speech recognition, suboptimal search techniques are vital to the decoding process.
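A rough count shows why exhaustive search is out of reach. The vocabulary size $V$ and utterance length $N$ below are illustrative assumptions, not figures from this tutorial. If each of the $N$ word positions could hold any of the $V$ words, an exhaustive search would have to score $V^N$ word sequences, even before accounting for where each word begins and ends in time:

$$
V^{N} = 1000^{10} = \left(10^{3}\right)^{10} = 10^{30} \quad \text{candidate word sequences for } V = 1000,\ N = 10
$$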

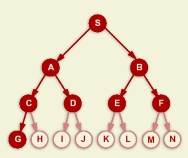

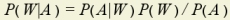

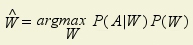

The primary search algorithm used in our software is a time-synchronous Viterbi beam search, which is essentially a breadth-first search algorithm. Section 4.1.2 describes search algorithms in more detail. Note that the terms "decoding" and "recognition" are often used interchangeably. The search algorithm combines the constraints imposed by the language model with the probabilities computed by the acoustic models, using a probabilistic framework based on Bayes' Rule:
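In its standard form, with $A$ denoting the sequence of acoustic observations and $W$ a candidate word sequence (symbol names chosen here for illustration), the rule lets the recognizer select the most probable word sequence $\hat{W}$:

$$
\hat{W} = \arg\max_{W} P(W \mid A)
        = \arg\max_{W} \frac{P(A \mid W)\,P(W)}{P(A)}
        = \arg\max_{W} P(A \mid W)\,P(W)
$$

The acoustic models supply $P(A \mid W)$ and the language model supplies $P(W)$. Because $P(A)$ is the same for every candidate $W$, it can be dropped from the maximization.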

Next, let's review the search process in a little more detail.
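As a first, simplified picture of that process, here is a minimal sketch of a time-synchronous Viterbi beam search over a toy hidden Markov model, written in Python. It is not the isip_recognize implementation. The function names, the two abstract states "A" and "B", and all probabilities are hypothetical, and the log-domain scores stand in only loosely for the acoustic-model and language-model scores a real decoder combines over words and phones. At every frame, all surviving hypotheses advance together (the breadth-first, time-synchronous part). Only the best-scoring path into each state is kept (the Viterbi part), and any hypothesis scoring more than `beam` below the frame's best is discarded (the beam part).

```python
import math
from typing import Dict, List, Tuple

NEG_INF = float("-inf")
# A hypothesis is (log score, state path so far). Copying whole paths keeps
# the sketch short; practical decoders store back-pointers instead.
Hyp = Tuple[float, List[str]]


def prune(hyps: Dict[str, Hyp], beam: float) -> Dict[str, Hyp]:
    """Drop hypotheses scoring more than `beam` below the frame's best."""
    if not hyps:
        return {}
    best = max(score for score, _ in hyps.values())
    return {s: h for s, h in hyps.items()
            if h[0] > NEG_INF and h[0] >= best - beam}


def viterbi_beam_search(
    log_init: Dict[str, float],
    log_trans: Dict[str, Dict[str, float]],
    log_emit: List[Dict[str, float]],
    beam: float,
) -> Tuple[List[str], float]:
    """Time-synchronous Viterbi search with beam pruning (hypothetical toy HMM).

    log_init[s]     -- log P(start in state s)
    log_trans[s][t] -- log P(move from state s to state t)
    log_emit[f][s]  -- log score of frame f's observation in state s
    """
    if not log_emit:
        raise ValueError("need at least one frame")
    # Frame 0: each state starts with its initial + emission log score.
    active = prune({s: (lp + log_emit[0].get(s, NEG_INF), [s])
                    for s, lp in log_init.items()}, beam)
    for frame in log_emit[1:]:
        extended: Dict[str, Hyp] = {}
        # Breadth-first: every surviving hypothesis advances by one frame.
        for s, (score, path) in active.items():
            for t, lt in log_trans.get(s, {}).items():
                cand = score + lt + frame.get(t, NEG_INF)
                # Viterbi recombination: keep only the best path into state t.
                if cand > extended.get(t, (NEG_INF, []))[0]:
                    extended[t] = (cand, path + [t])
        active = prune(extended, beam)
    if not active:
        raise ValueError("all hypotheses were pruned")
    best_state = max(active, key=lambda s: active[s][0])
    best_score, best_path = active[best_state]
    return best_path, best_score


# Hypothetical two-state model with three frames of observations.
log = math.log
LOG_INIT = {"A": log(0.6), "B": log(0.4)}
LOG_TRANS = {"A": {"A": log(0.9), "B": log(0.1)},
             "B": {"A": log(0.1), "B": log(0.9)}}
LOG_EMIT = [{"A": log(0.9), "B": log(0.3)},
            {"A": log(0.1), "B": log(0.9)},
            {"A": log(0.1), "B": log(0.9)}]

if __name__ == "__main__":
    print(viterbi_beam_search(LOG_INIT, LOG_TRANS, LOG_EMIT, beam=math.inf))
    print(viterbi_beam_search(LOG_INIT, LOG_TRANS, LOG_EMIT, beam=1.0))
```

With an unlimited beam the search is exact and returns the path B B B (probability 0.078732). With `beam=1.0`, state B falls outside the beam at the first frame and is pruned, so the search settles on the lower-scoring path A B B (probability 0.039366). Giving up that accuracy in exchange for exploring far fewer hypotheses is what makes beam search a suboptimal technique.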