|

Conversational speech is far more variable than words spoken in isolation. This variability includes noise, alternative pronunciations, and previously unseen words. Importantly, in conversational speech people often use pronunciations that deviate from their dictionary citation forms. Most state-of-the-art ASR systems do not model such alternative pronunciations; instead, they make a hard decision by assuming a single pronunciation for each word. In this tutorial, we introduce a new feature of the production system, known as network training, that lets users train pronunciation models directly. In fact, any level in a user-defined hierarchy of grammars, including language models, can be trained.

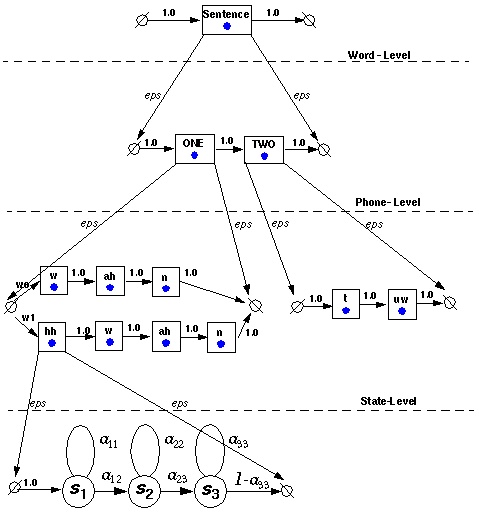

The network trainer implemented in the production system extends the concept of Baum-Welch (or Viterbi) training to any level of a user-defined hierarchy of networks, or grammars. Each level in this hierarchy is represented as a finite state machine using the Java Speech Grammar Format (JSGF). Within the hierarchical network framework, each instance in the network is recursively expanded into a sub-network of instances. The system can train this entire network, or selectively train any level in it.

Now, let us consider a simple example consisting of a three-level system. These three levels correspond to: (1) word-level: the utterance transcription, (2) phone-level: a lexicon that expands the words into phones, and (3) state-level: acoustic models (HMMs) that expand the phone models into state sequences. The utterance in our example contains two words: "ONE TWO." In supervised training, we force the recognizer to align a sequence of acoustic models to this transcription. The transcription is converted to a highly constrained grammar that forms the highest level in the hierarchy of grammars used by the production system.

Next, consider a single-word lexicon with two pronunciations for the word "ONE":

    ONE w ah n
    ONE hh w ah n

Let's begin by constructing the acoustic models in JSGF (state-level). Here is a typical three-state HMM for the phone hh:

    #JSGF V1.0
    // Define the grammar name
    //
    grammar network.grammar.hh;
    // Define the rules
    //
    public <hh> = <ISIP_JSGF_1_0_START> /0/ <S1> /0/ <S2> /0/ <S3> /0/ <ISIP_JSGF_1_0_TERM>;
    <S1> = /0/ S1+;
    <S2> = /0/ S2+;
    <S3> = /0/ S3+;

Next, we need to define the lexicon (phone-level). This is done by processing the data above (the pronunciations for the word "ONE") through a model creation utility called isip_model_creator. The resulting network is as follows:

    grammar = {
    #JSGF V1.0
    // Define the grammar name
    //
    grammar network.grammar.ONE;
    // Define the rules
    //
    public <ONE> = <ISIP_JSGF_1_0_START> ( ( /0/ w /0/ ah /0/ n ) | ( /0/ hh /0/ w /0/ ah /0/ n ) ) /0/ <ISIP_JSGF_1_0_TERM>;
    };
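
To make the recursive expansion concrete, the following Python sketch mimics how a transcription could be expanded through the lexicon into phone sequences and then into HMM state sequences. It is an illustration only and not the production system's implementation or the behavior of isip_model_creator. The function names, the `phone.S<k>` state labels, and the pronunciation given for "TWO" are assumptions introduced here, and the first pronunciation of "ONE" is assumed to be `w ah n`.

```python
from itertools import chain, product

# Lexicon for the example. The two pronunciations of "ONE" follow the
# tutorial; the entry for "TWO" is an assumption added for illustration.
LEXICON = {
    "ONE": [["w", "ah", "n"], ["hh", "w", "ah", "n"]],
    "TWO": [["t", "uw"]],  # assumed pronunciation, not from the tutorial
}

STATES_PER_PHONE = 3  # matches the three-state HMM shown for "hh"


def lexicon_rule(word, pronunciations):
    """Render a JSGF rule in the style of the lexicon grammar above."""
    alternatives = " | ".join(
        "( " + " ".join(f"/0/ {phone}" for phone in pron) + " )"
        for pron in pronunciations
    )
    return (
        f"public <{word}> = <ISIP_JSGF_1_0_START> "
        f"( {alternatives} ) /0/ <ISIP_JSGF_1_0_TERM>;"
    )


def expand_phones(transcription, lexicon=LEXICON):
    """Word level -> phone level: all phone sequences the lexicon allows."""
    choices = [lexicon[word] for word in transcription.split()]
    return [list(chain.from_iterable(combo)) for combo in product(*choices)]


def expand_states(phones, n_states=STATES_PER_PHONE):
    """Phone level -> state level: label each state as phone.S<k>
    (a hypothetical naming scheme used only in this sketch)."""
    return [f"{p}.S{k}" for p in phones for k in range(1, n_states + 1)]


if __name__ == "__main__":
    print(lexicon_rule("ONE", LEXICON["ONE"]))
    for phones in expand_phones("ONE TWO"):
        print(" ".join(phones), "->", " ".join(expand_states(phones)))
```

Each path printed by the script corresponds to one alignment hypothesis that training has to weigh. With two pronunciations for "ONE" and one for "TWO", the transcription "ONE TWO" yields two candidate phone sequences, which is why a trainer that supports alternative pronunciations must distribute evidence across paths instead of committing to a single one.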

The remaining level is the word level, which essentially defines the language model. It is generated dynamically during training and allows the user to insert optional silences between words or at the ends of files, to account for arbitrary amounts of silence. The network created in this process looks as follows:

These models are then trained using the main recognition utility of the system, isip_recognize. This utility includes an HMM trainer that implements both the Baum-Welch training algorithm (preferred in this case) and the Viterbi algorithm. For a more extended tutorial that describes how to use the recognizer, see the production system tutorials. |