|

At CAVS, we have one of the most powerful computing infrastructures

of any research center. We currently possess a 1000-node 1 GHz

cluster and a 300-node 3 GHz cluster. However, such high computing power

is wasted if CPU utilization is low.

Currently, we use PBS to submit jobs to the cluster. It takes time

to get used to this system though, and each job requires a different

script. Hence, setting up the individual scripts becomes a

large task in itself. Such small chores add up and cut into overall

productivity. Here at the IES (Intelligent Electronic Systems) group at

CAVS, we are working on a tool

called 'isip_run' which automates the process of job submission to the

cluster. This tool will still use the default batch system, but it will

be transparent to the user. The tool can be thought of as a command-line

interface to the cluster. We are working on making this tool a general

purpose utility so that it can be used for various tasks other than

just speech jobs. For example, we can run shell commands such as 'grep'

and 'find' in parallel.

The primary aim is to submit N jobs to M cpus. At ISIP, we were using a

similar tool, which was also called 'isip_run', but it was used only

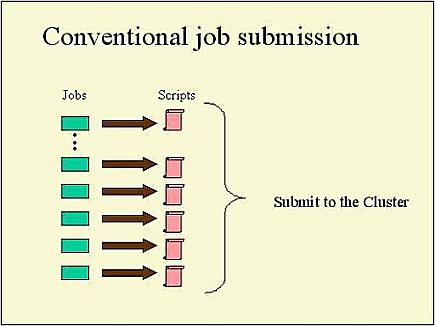

for parallel training on servers. The conventional method to submit

jobs can be described by the diagram shown above.

The diagram illustrates the fact that every job needs a script,

and to generate these scripts we have to either write another

Perl/shell script or create them by hand.
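
To make the conventional workflow concrete, here is a minimal Python sketch of the kind of helper script a user ends up writing: it emits one PBS script per command. The function name `write_job_scripts`, the `job_NNN.sh` file naming, and the resource request are assumptions made for illustration only; they are not taken from any ISIP tool.

```python
from pathlib import Path

# Hypothetical per-job template. "#PBS -N" sets the job name and
# "#PBS -l" requests resources; the resource values are placeholders.
PBS_TEMPLATE = """#!/bin/sh
#PBS -N {name}
#PBS -l nodes=1:ppn=1
cd "$PBS_O_WORKDIR"
{command}
"""


def write_job_scripts(commands, out_dir):
    """Write one PBS script per command and return the script paths."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, command in enumerate(commands):
        name = f"job_{index:03d}"
        path = out / f"{name}.sh"
        path.write_text(PBS_TEMPLATE.format(name=name, command=command))
        paths.append(path)
    return paths
```

Each generated file would then still have to be submitted individually to the batch system, which is the repetitive step the rest of this article is concerned with.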

The aim of 'isip_run' is to automate this process so that the user

doesn't have to worry about creating scripts for submitting jobs. The

command-line interface for isip_run is as follows:

isip_run -mode < cluster/server > -cpus < N number/server_names list file >

-command_to_execute < any command including shell commands > *.*

-batch_options < any batch system options other than the default ones >

The command line will also accept a list of files instead of '*.*' and

run jobs on those files.
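
As a rough illustration of the interface described above, the following Python sketch parses the same option names with `argparse`. It is not the parser used by isip_run; the function name `parse_isip_run_args` and the decision to keep `-cpus` as a plain string (since it may be either a number or a server list file) are assumptions.

```python
import argparse


def parse_isip_run_args(argv):
    """Parse an isip_run-style command line (illustrative only)."""
    parser = argparse.ArgumentParser(prog="isip_run", allow_abbrev=False)
    parser.add_argument("-mode", choices=["cluster", "server"], required=True)
    # Either a CPU count or the name of a file listing servers.
    parser.add_argument("-cpus", required=True)
    parser.add_argument("-command_to_execute", required=True)
    parser.add_argument("-batch_options", default="")
    # In a real shell, '*.*' is expanded into file names before the
    # program sees it, so the files arrive as ordinary arguments.
    parser.add_argument("files", nargs="*")
    return parser.parse_args(argv)


args = parse_isip_run_args(
    ["-mode", "cluster", "-cpus", "8",
     "-command_to_execute", "ls -l", "a.txt", "b.txt"]
)
```

Here `args.command_to_execute` holds the quoted command and `args.files` holds the file names the command should be applied to.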

Now, script generation and submission will be transparent to the user,

since the user just needs to run the above command line.

The jobs will be distributed equally across the CPUs, based on the number

specified by the user. The screen will display the ID of each submitted

job along with the cluster name.

Sword_[4]: isip_run.exe -mode cluster -cpus 8 -command_to_execute 'ls -l' *.*

100166.Empire

100167.Empire

100168.Empire

100169.Empire

100170.Empire

100171.Empire

100172.Empire

100173.Empire

Sword_[4]:
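
The article does not say how isip_run divides the work, so the following is only a sketch of one way to spread N files evenly over M CPUs: a round-robin split in which group sizes differ by at most one. The names `distribute` and `build_commands` are hypothetical.

```python
import shlex


def distribute(items, n_cpus):
    """Split items into at most n_cpus groups of near-equal size."""
    if n_cpus < 1:
        raise ValueError("n_cpus must be at least 1")
    groups = [[] for _ in range(n_cpus)]
    for index, item in enumerate(items):
        # Round-robin assignment keeps group sizes within one of each other.
        groups[index % n_cpus].append(item)
    # Drop empty groups when there are fewer items than CPUs.
    return [group for group in groups if group]


def build_commands(command, items, n_cpus):
    """Build one shell command line per group of files."""
    return [
        command + " " + " ".join(shlex.quote(item) for item in group)
        for group in distribute(items, n_cpus)
    ]
```

For instance, ten files spread over four CPUs give groups of sizes 3, 3, 2 and 2, and each group becomes a single job.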

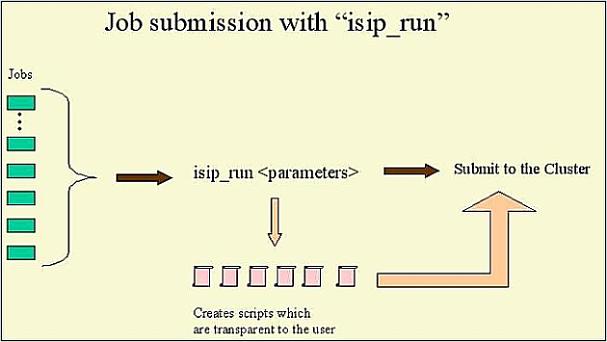

The job submission process using 'isip_run' can be represented by the

following diagram:

It can easily be seen that the user does not have to worry about

splitting the jobs and setting up the scripts. Everything is taken

care of by 'isip_run'. This utility will be a part of our upcoming

release. If you do not currently have ISIP's software installed, you

may find detailed instructions on how to download and install our

software on your system

here.

|