Last month's tutorial presented an introduction to the control flow of our hybrid approaches to applying support vector machines (SVMs) and relevance vector machines (RVMs) to continuous speech recognition. This tutorial, the second in a series of three, explains how to train and evaluate an HMM/SVM hybrid system. The third and final tutorial will cover the RVM system in the same way. Both systems will be released with version r00_n12 of our production system.

You can monitor the progress of this release on our ASR mailing list.

This tutorial describes the steps needed to run our hybrid system, which implements risk minimization-based approaches such as SVMs. The theory behind this implementation is covered in the dissertation:

The next tutorial will describe the steps needed to run our hybrid system for risk minimization-based approaches such as RVMs. The theory behind the RVM implementation is covered in the dissertation:

A number of conference publications and seminars are also available on our publications web site.

In last month's tutorial, we described our two-pass hybrid system approach, which uses an HMM system to generate N-best lists and alignments, and an SVM (or RVM) system to rescore these results. This tutorial explains the command lines of the utilities required to build the SVM system.

HMM/SVM Hybrid System:

In this section, we cover the process of integrating the HMM output into the SVM-based hybrid system. As discussed in the first tutorial in this series, the two basic steps in building the SVM-based hybrid system are SVM training and SVM-based N-best list re-scoring. In this tutorial, we describe the command line interfaces of the utilities required for these two steps.

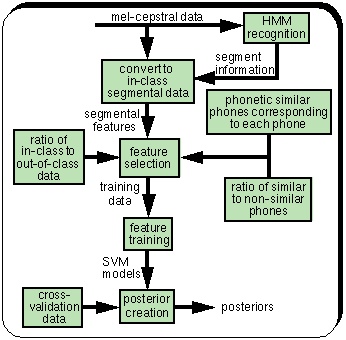

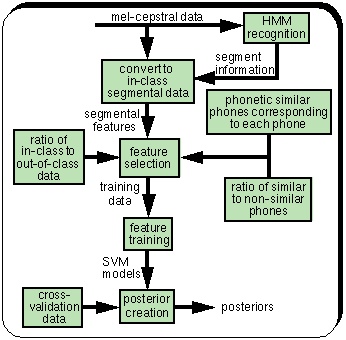

- SVM Training:

In the first tutorial, which presented an overview of SVM/RVM hybrid systems, we learned that training the support vector machines (SVMs) consists of a sequence of four steps: Segmental Feature Generation, Segmental Feature Selection, Core SVM Training, and Posterior Creation. For simplicity, let's assume that we are training one SVM for each monophone in the phone set of the database under consideration. As shown in the figure, the output at the end of this sequence of four steps is a set of posteriors, one for each monophone.

i. Segmental Feature Generation on Symbol (Phone) Basis:

The first step in SVM training is to extract segmental features from the normalized 39-dimensional mel-cepstral (MFCC) training data using the corresponding segmental information (symbol alignments). The concatenated segmental feature vectors representing the in-class data for a specific symbol (a monophone in our case) can be extracted using the isip_segment_concat utility. First, we compute the minimum and maximum values of each dimension of the input feature vectors:

isip_segment_concat -min_max \
-min_max_file min_max.sof \
-output_type text -list ident_sdb.sof \
-audiodb audiodb.sof -input_format sof \
-input_type binary -verbosity brief

Further details on the isip_segment_concat interface can be obtained using the following command:

isip_segment_concat -help
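The min/max pass amounts to a per-dimension scan over every frame of the training data. The Python sketch below shows that computation on in-memory lists; the function name `compute_min_max` and the list-of-frames representation are hypothetical stand-ins for the .sof feature files that isip_segment_concat actually reads.

```python
from typing import List, Sequence, Tuple

Frame = Sequence[float]


def compute_min_max(utterances: Sequence[Sequence[Frame]]) -> Tuple[List[float], List[float]]:
    """Return the per-dimension minimum and maximum over all frames of all utterances.

    Hypothetical stand-in for the -min_max pass: each utterance is a list of
    feature vectors (for example, 39-dimensional MFCC frames).
    """
    dim = len(utterances[0][0])
    mins = [float("inf")] * dim
    maxs = [float("-inf")] * dim
    for frames in utterances:
        for frame in frames:
            for d, value in enumerate(frame):
                mins[d] = min(mins[d], value)
                maxs[d] = max(maxs[d], value)
    return mins, maxs


if __name__ == "__main__":
    data = [[[1.0, -2.0], [3.0, 0.0]], [[-1.0, 5.0]]]  # two toy utterances, 2-D frames
    print(compute_min_max(data))  # ([-1.0, -2.0], [3.0, 5.0])
```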


Next, we normalize the mel-cepstral features to the range [-1, 1] on the fly, using the minimum and maximum value of each dimension of the input feature vectors, and extract the segmental features on a symbol (phone) basis. The feature vectors corresponding to each alignment are divided into three regions following the heuristic ratio 3:4:3, which is specified using the -ratio option. The feature vectors in each region are averaged to form a single feature vector, and the three averaged vectors are then concatenated. Finally, the log of the length of the alignment (the segment duration) is appended to the concatenated feature vector. The command line to extract the segmental features on a symbol basis is:

isip_segment_concat -duration -normalize -min_max_file min_max.sof -list ident_sdb.sof -audiodb audiodb.sof \
-input_format sof -input_type binary -symbol_list symbols_sdb.sof -dimension 39 -ratio "3:4:3" \
-transdb transdb.sof -level phone -output_type binary -output_format sof -suffix binary \
-extension sof -verbosity brief
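To make the feature construction concrete, here is a minimal Python sketch of the normalization and 3:4:3 segmentation described above. It assumes a linear min/max mapping to [-1, 1], rounds region boundaries to the nearest frame, and measures the alignment length in frames. None of these details is confirmed for isip_segment_concat, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

Frame = Sequence[float]


def normalize_frame(frame: Frame, mins: Sequence[float], maxs: Sequence[float]) -> List[float]:
    """Linearly map each dimension from [min, max] to [-1, 1] (assumed mapping)."""
    out = []
    for value, lo, hi in zip(frame, mins, maxs):
        span = hi - lo
        # A constant dimension carries no information; map it to 0 instead of dividing by zero.
        out.append(0.0 if span == 0 else 2.0 * (value - lo) / span - 1.0)
    return out


def region_bounds(n_frames: int, weights: Sequence[int]) -> List[int]:
    """Frame indices that split a segment in proportion to weights, e.g. [3, 4, 3].

    Rounding each boundary to the nearest frame is an assumption.
    """
    total = sum(weights)
    bounds, acc = [0], 0
    for w in weights[:-1]:
        acc += w
        bounds.append(round(n_frames * acc / total))
    bounds.append(n_frames)
    return bounds


def segmental_feature(frames: Sequence[Frame], ratio: str = "3:4:3") -> List[float]:
    """Average each region, concatenate the averages, and append a log-duration term."""
    weights = [int(part) for part in ratio.split(":")]
    bounds = region_bounds(len(frames), weights)
    feature: List[float] = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        if end <= start:
            raise ValueError("segment has too few frames for ratio " + ratio)
        region = frames[start:end]
        for d in range(len(region[0])):
            feature.append(sum(f[d] for f in region) / len(region))
    # Assumption: the alignment length is measured in frames.
    feature.append(math.log(len(frames)))
    return feature


if __name__ == "__main__":
    segment = [[float(i)] for i in range(10)]  # ten one-dimensional frames: 0.0 .. 9.0
    print(segmental_feature(segment))  # [1.0, 4.5, 8.0, 2.302585092994046]
```

With 39-dimensional input, each segment yields 3 * 39 + 1 = 118 values: three averaged regions plus the log-duration term.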

ii. Segmental Feature Selection:

In this step, the out-of-class data for each symbol is selected from the in-class data of the remaining symbols using the isip_feature_select utility.

The training data for a symbol is constructed by selecting equal amounts of segmental features from the in-class data (data belonging to that symbol) and the out-of-class data (data belonging to other symbols), which is specified by setting the -ratio_class option to "1:1". Further, half of the out-of-class data is randomly selected from the set of phonetically similar symbols (phones), and the remaining half is randomly selected from the rest of the symbols (phones); this split is specified by setting the -ratio_similar option to "1:1". The command line to create the out-of-class data for the symbol "aa" is:

isip_feature_select -audiodb database/inclass_db.sof -symbol aa -list lists/aa_similar_symbols_sdb.sof \
-symbol_list lists/all_symbols_sdb.sof -ratio_class "1:1" -ratio_similar "1:1" -suffix _out_of_class \
-input_format sof -input_type binary -verbose brief

This command line is repeated to create the out-of-class data for each of the symbols (phones). Next, we create an audio database of the in-class and out-of-class feature files using the isip_make_db utility. We are then ready to run SVM training. Further details on the isip_feature_select and isip_make_db interfaces can be obtained using the following commands:

isip_feature_select -help

isip_make_db -help
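The selection rule can be sketched as follows. This is a hypothetical Python illustration of the two ratios, not the isip_feature_select implementation: `inclass` maps each symbol to its segmental feature vectors, `similar` maps a symbol to its phonetically similar symbols, and the behavior when a pool is too small (take whatever is available) is an assumption.

```python
import random
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def parse_ratio(ratio: str) -> List[int]:
    """Turn a ratio string such as "1:1" into integer weights [1, 1]."""
    return [int(part) for part in ratio.split(":")]


def select_out_of_class(
    symbol: str,
    inclass: Dict[str, Sequence[T]],
    similar: Dict[str, Sequence[str]],
    ratio_class: str = "1:1",
    ratio_similar: str = "1:1",
    seed: int = 0,
) -> List[T]:
    """Randomly draw out-of-class examples for `symbol` (hypothetical helper)."""
    rng = random.Random(seed)
    in_w, out_w = parse_ratio(ratio_class)
    n_out = len(inclass[symbol]) * out_w // in_w  # in-class : out-of-class
    sim_w, rest_w = parse_ratio(ratio_similar)
    n_similar = n_out * sim_w // (sim_w + rest_w)  # similar : remaining symbols
    n_rest = n_out - n_similar

    similar_set = set(similar.get(symbol, ())) - {symbol}
    similar_pool = [x for s in sorted(similar_set) for x in inclass.get(s, ())]
    rest_pool = [x for s, feats in inclass.items()
                 if s != symbol and s not in similar_set for x in feats]

    # If a pool is smaller than requested, take all of it (assumed behavior).
    picked = rng.sample(similar_pool, min(n_similar, len(similar_pool)))
    picked += rng.sample(rest_pool, min(n_rest, len(rest_pool)))
    return picked


if __name__ == "__main__":
    data = {"aa": ["aa%d" % i for i in range(4)],
            "ao": ["ao%d" % i for i in range(10)],
            "s": ["s%d" % i for i in range(10)]}
    print(select_out_of_class("aa", data, {"aa": ["ao"]}))  # two "ao" items, then two "s" items
```

With four in-class "aa" segments and both ratios at 1:1, the sketch draws four out-of-class segments: two from the similar phone "ao" and two from the remaining phones.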

iii. Core SVM Training:

Next, as shown in the figure to the right, given the in-class and out-of-class data, an SVM is trained for each symbol (phone) in the symbol set with a one-vs-all training scheme using the isip_svm_learn utility. The output of SVM training is the set of support vectors for each symbol. To train the SVM for the symbol "aa" using an RBF kernel with a gamma value of 1.0 and a penalty of 10, run the following command line:

isip_svm_learn -par params_train_aa.sof -pos_examples identifiers_train_in_aa.sof \
-neg_examples identifiers_train_out_aa.sof -log_file logs/learn_aa.log -penalty 10 -gamma 1.0 -verbose brief

Similarly, we train SVM models for the rest of the symbols (phones) in the database. Further details on the isip_svm_learn interface can be obtained using the following command:

isip_svm_learn -help
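Since the trained model is a set of support vectors, the SVM distance used in the next step can be understood through the standard SVM decision function f(x) = sum_i alpha_i y_i K(x_i, x) + b with an RBF kernel K(x, y) = exp(-gamma * ||x - y||^2). The Python sketch below evaluates it; whether ISIP parameterizes gamma exactly this way, the labeling of in-class examples as +1, and the function names are assumptions.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def rbf_kernel(x: Vector, y: Vector, gamma: float) -> float:
    """K(x, y) = exp(-gamma * ||x - y||^2); this gamma convention is assumed."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def svm_distance(x: Vector, support_vectors: Sequence[Vector],
                 coefficients: Sequence[float], bias: float, gamma: float) -> float:
    """Signed SVM output; coefficients[i] holds alpha_i * y_i for support vector i.

    Assuming in-class examples are labeled +1, positive values fall on the
    in-class side of the decision boundary.
    """
    return sum(c * rbf_kernel(sv, x, gamma)
               for sv, c in zip(support_vectors, coefficients)) + bias


if __name__ == "__main__":
    svs = [[0.0], [1.0]]
    coefs = [1.0, -1.0]  # one in-class and one out-of-class support vector
    print(svm_distance([0.5], svs, coefs, bias=0.0, gamma=1.0))  # 0.0, midway between them
    print(svm_distance([0.1], svs, coefs, bias=0.0, gamma=1.0) > 0)  # True
```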

iv. Posterior Creation:

Finally, for each SVM trained in the previous step, a posterior probability is estimated by fitting a sigmoid to a set of SVM distances. The set of distances for each SVM is computed by classifying a held-out cross-validation set with that SVM using the isip_svm_classify utility. The command line to create the set of positive and negative distances for the symbol "aa" is:

isip_svm_classify -audio_database audio_db.sof -list identifiers_cross_valid.sof -model_file svm_model_aa.sof \
-output_file hyp_aa.out -verbose brief -log_file classify_aa.log

Similarly, a set of distances is computed for each of the symbols (phones). Next, we use the isip_posterior_creator utility to create, for each symbol, a posterior model that converts SVM distances to probabilities:

isip_posterior_creator -model_file svm_model_aa.sof -output_file sigmoid_fit_aa.sof \
-pos_examples pos_examples_list.sof -neg_examples neg_examples_list.sof

Next, we need to generate a statistical model pool file that maps the states in the language model file to the probability models generated in the previous step. The recognizer requires this file during decoding, and it can be generated using the isip_model_combiner utility:

isip_model_combiner -param model_combine.text \
-output_file stat_model.sof -output_level 2 \
-type binary -verbose verbose -brief

We are now ready for N-best re-scoring. Further details on the isip_svm_classify, isip_posterior_creator, and isip_model_combiner interfaces can be obtained using the following commands:

isip_svm_classify -help

isip_posterior_creator -help

isip_model_combiner -help


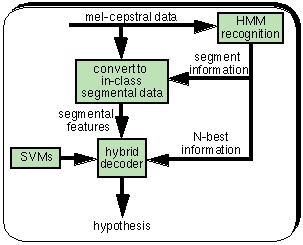

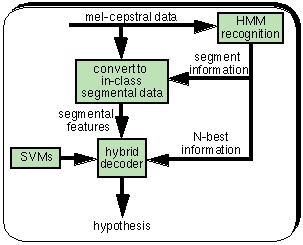

- SVM-based N-best List Re-scoring:

In this section, we describe the process of SVM-based N-best list re-scoring. The figure gives an overview of the decoding process used in the HMM/SVM hybrid system. First, segmental features are extracted from the mel-cepstral (MFCC) test data using the segmental information from the baseline HMM system. In the second step, the N-best lists output by the baseline HMM system are re-scored to produce the final hypotheses.

i. Segmental feature generation on an utterance basis:

Instead of generating segmental features on a symbol basis, as is done during training, the segmental features are generated on an utterance basis using the isip_segment_concat utility. The symbol alignments for all the utterances in the test database are generated using the baseline HMM system.

isip_segment_concat -duration -normalize \
-min_max_file min_max.sof -list ident_sdb.sof \
-audiodb audiodb.sof -input_format sof \
-input_type binary -ratio 3:4:3 \
-transdb transdb.sof -level phone \
-output_type binary -output_format sof \
-suffix text -extension sof -verbosity brief

ii. N-best re-scoring:

In the first pass of decoding, the N-best lists for each utterance in the test database are generated by the baseline HMM system. This process is described in the N-best Generation section of our online tutorial. These N-best lists are then re-scored in the second pass using the SVM models (posteriors) to produce the output hypotheses. This procedure is the same as the re-scoring process described in Word Graph Re-scoring.
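The exact second-pass scoring follows the Word Graph Re-scoring procedure and is not reproduced here. As a deliberately simplified, hypothetical illustration of the idea, the Python sketch below scores each N-best hypothesis by summing the log posteriors of its aligned phone segments and ranks the hypotheses by that score. It assumes that the SVM distance of each segment under its hypothesized phone's model has already been computed, and that each phone's sigmoid parameters (a, b) come from the posterior creation step. It ignores any HMM acoustic or language model scores.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical representation: (word string, [(phone, svm_distance), ...] in time order).
Hypothesis = Tuple[str, Sequence[Tuple[str, float]]]


def posterior(distance: float, a: float, b: float) -> float:
    """Sigmoid posterior 1 / (1 + exp(a * distance + b)), computed without overflow."""
    z = a * distance + b
    if z >= 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def hypothesis_score(segments: Sequence[Tuple[str, float]],
                     sigmoids: Dict[str, Tuple[float, float]],
                     floor: float = 1e-10) -> float:
    """Sum of log posteriors over a hypothesis's phone segments (simplified scoring)."""
    total = 0.0
    for phone, distance in segments:
        a, b = sigmoids[phone]
        total += math.log(max(posterior(distance, a, b), floor))  # floor avoids log(0)
    return total


def rescore(nbest: Sequence[Hypothesis],
            sigmoids: Dict[str, Tuple[float, float]]) -> List[Tuple[float, str]]:
    """Return (score, words) pairs sorted best-first."""
    scored = [(hypothesis_score(segs, sigmoids), words) for words, segs in nbest]
    return sorted(scored, key=lambda item: item[0], reverse=True)


if __name__ == "__main__":
    sigmoids = {"aa": (-2.0, 0.0), "ao": (-2.0, 0.0), "b": (-2.0, 0.0)}
    nbest = [("hyp one", [("b", 0.5), ("aa", -1.0)]),
             ("hyp two", [("b", 0.5), ("ao", 1.0)])]
    print(rescore(nbest, sigmoids)[0][1])  # hyp two
```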


In this tutorial, the second in a series of three, we introduced the command line interfaces of the utilities needed to train and evaluate the HMM/SVM hybrid system. In the final tutorial in this series, we will introduce the command line interfaces of the utilities required to build an HMM/RVM hybrid system.
