This tutorial is the first in a sequence of three tutorials that
introduce you to the control flow of our hybrid approaches to
applying support vector machines (SVMs) and relevance
vector machines (RVMs) to continuous speech recognition.
Next month, I will explain in detail how to train and evaluate
an SVM system. In the following month, I will provide a similar
tutorial for the RVM system. Both of these systems will be
released with version r00_n12 of our production system.
You can monitor the progress of this release on our
asr mailing list.

This tutorial provides an overview of the control flow for our
hybrid system approach to implementing risk minimization-based
techniques such as SVMs and RVMs. A detailed description of the
software interface will be given in next month's tutorial.

The theory behind these implementations is covered in two
dissertations:

- A. Ganapathiraju, Support Vector Machines for Speech Recognition, Ph.D. Dissertation, Department of Electrical and Computer Engineering, Mississippi State University, January 2002.

- J. Hamaker, Sparse Bayesian Methods for Continuous Speech Recognition, Ph.D. Dissertation Proposal, Department of Electrical and Computer Engineering, Mississippi State University, March 2002.

A number of conference publications and seminars are also

available on our

publications web site.

Our hybrid system approach is a two-pass system that essentially

uses an HMM system to generate the N-best lists and alignments,

and an SVM (or RVM) system to rescore these results. These are

described below.

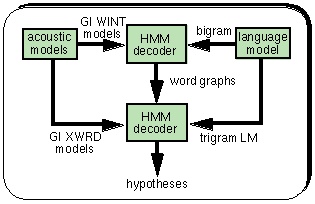

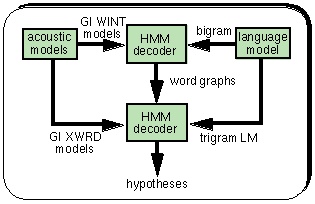

1. HMM System:

Our baseline HMM system is described in detail in our

speech recognition system tutorial.

A summary is shown in the figure to the right.

Three basic steps are required to build the HMM-based system:
feature extraction, training, and N-best list generation.

Click on the links provided to learn more about these steps.

Below we will focus on how we integrate output from the HMM with

the SVM or RVM system.

2. HMM/SVM Hybrid System:

In this section, we cover how the HMM output is integrated into
the SVM-based hybrid system. The RVM-based hybrid system is
integrated with the HMM output in a similar way. The two basic
steps in building the SVM-based hybrid system are SVM training
and SVM-based N-best list rescoring. Both steps require input
from the HMM system. These requirements are discussed in the
two sections below.

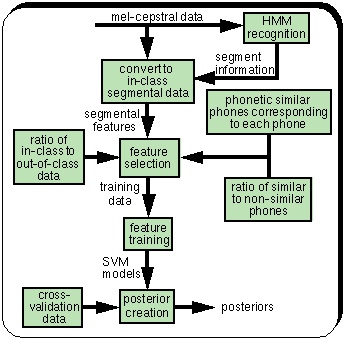

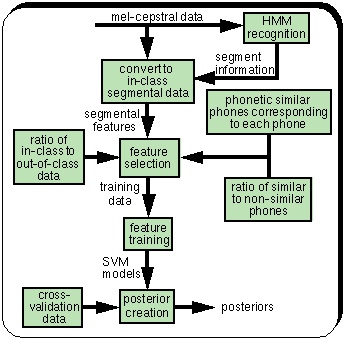

- SVM Training:

Training the support vector models (SVMs) consists of a
sequence of four steps: Segmental Feature Generation,
Segmental Feature Selection, Core SVM Training, and
Posterior Creation. For simplicity, let's assume that we
are training one SVM for each monophone in the phone set
of the database under consideration. The output at the end
of this sequence of four steps is a set of posteriors, one
for each of the monophones. A block diagram overview of the
flow of SVM training is provided in the figure shown below.

i. Segmental Feature Generation on a Symbol (Phone) Basis:

The first step in SVM training is to extract segmental
features from the mel-cepstral (MFCC) training data using
the corresponding segmental information (symbol alignments).
The segmental information is generated using the baseline
HMM system. A typical segmental feature vector is extracted
from the mel-cepstral feature vectors that correspond to one
of the symbol alignments. The details of this process are
documented extensively in Aravind Ganapathiraju's
dissertation. The segmental feature vectors corresponding to
all instances of a specific symbol (phone) in the training
data are concatenated together. The concatenated segmental
feature vectors thus represent the in-class data for that
symbol. Following this procedure, in-class data is generated
for all the symbols (monophones). Next, out-of-class data is
generated.
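The tutorial defers the exact construction of a segmental feature vector to the dissertation. As a rough illustration only, the sketch below assumes one simple construction: each aligned segment is split into three regions in a 3-4-3 proportion, the frames in each region are averaged, the region means are concatenated, and the log of the segment duration (in frames) is appended. The function names, the alignment format `(symbol, start, end)`, and the 3-4-3 split are assumptions of this sketch, not a description of the released software.

```python
import math


def segmental_feature(frames, proportions=(3, 4, 3)):
    """Map a variable-length list of frame vectors to one fixed-length vector.

    Assumed construction (not specified in this tutorial): split the segment
    into regions by `proportions`, average the frames in each region,
    concatenate the region means, and append log(duration in frames).
    """
    n = len(frames)
    if n == 0:
        raise ValueError("segment has no frames")
    dim = len(frames[0])
    total = sum(proportions)

    # Region boundaries expressed as frame indices.
    bounds = [0]
    acc = 0
    for p in proportions:
        acc += p
        bounds.append(round(n * acc / total))

    feature = []
    for r in range(len(proportions)):
        start, end = bounds[r], bounds[r + 1]
        if end <= start:
            # Very short segment: reuse the nearest frame so no region is empty.
            start = min(start, n - 1)
            end = start + 1
        region = frames[start:end]
        feature.extend(sum(f[d] for f in region) / len(region) for d in range(dim))
    feature.append(math.log(n))
    return feature


def in_class_data(utterances):
    """Collect segmental features per symbol.

    `utterances` is an iterable of (frames, alignment) pairs, where
    `alignment` is a list of (symbol, start, end) with `end` exclusive.
    """
    data = {}
    for frames, alignment in utterances:
        for symbol, start, end in alignment:
            data.setdefault(symbol, []).append(segmental_feature(frames[start:end]))
    return data
```

With 1-dimensional frames `0.0 … 9.0`, the three region means are the averages of frames 0-2, 3-6, and 7-9, followed by `log(10)`. Each symbol's list in the returned dictionary plays the role of that symbol's in-class data.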

ii. Segmental Feature Selection:

In order to train an SVM for a symbol, we require both
in-class data and out-of-class data corresponding to
that symbol. In this step, the out-of-class data for
each symbol is selected from the in-class data of the
other symbols.

The training data for a symbol is constructed by
selecting equal amounts of segmental features from the
in-class data (data belonging to the same symbol)
and the out-of-class data (data belonging to other
symbols) for that specific symbol. Further, half of
the out-of-class data is randomly selected from the
set of phonetically similar symbols (phones), and the
remaining half is randomly selected from the rest of the
symbols (phones). This process is illustrated as
feature selection in the figure shown to the
right.
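The selection rule described above can be sketched as follows. This is a minimal illustration, assuming that in-class data is held in a dictionary keyed by symbol and that a table of phonetically similar symbols is available; `select_training_data` and the `similar` table are hypothetical names introduced here. When a pool is too small, the sketch shrinks both classes so that the equal-amount and half-and-half properties still hold, which is a choice made for this example.

```python
import random


def select_training_data(symbol, in_class, similar, seed=0):
    """Return balanced (positives, negatives) for one symbol.

    in_class: dict mapping symbol -> list of segmental feature vectors
    similar:  dict mapping symbol -> set of phonetically similar symbols
              (hypothetical lookup table)
    """
    rng = random.Random(seed)
    close = similar.get(symbol, set())

    # Out-of-class candidates come from the in-class data of other symbols.
    near_pool = [v for s, vecs in in_class.items()
                 if s != symbol and s in close for v in vecs]
    far_pool = [v for s, vecs in in_class.items()
                if s != symbol and s not in close for v in vecs]

    # Half of the negatives from similar symbols, half from the rest.
    n_half = min(len(in_class[symbol]) // 2, len(near_pool), len(far_pool))
    negatives = rng.sample(near_pool, n_half) + rng.sample(far_pool, n_half)

    # Equal amounts of in-class and out-of-class data.
    positives = rng.sample(in_class[symbol], 2 * n_half)
    return positives, negatives
```

For example, with 10 in-class vectors for "b", 8 vectors for a similar symbol "p", and 20 vectors for a dissimilar symbol "aa", the function returns 10 positives and 10 negatives, 5 drawn from "p" and 5 from "aa".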

iii. Core SVM Training:

Next, as shown in the figure to the right, given the
in-class and out-of-class data, support vector models
corresponding to each symbol (phone) in the
symbol set are trained using a one-vs-all training
scheme. The output of SVM training is the set of
support vectors corresponding to each of the symbols.
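To make the one-vs-all loop concrete, here is a small self-contained sketch. It is not the trainer used in our system: as a stand-in it uses a linear SVM without a bias term, trained with a Pegasos-style stochastic subgradient method, so each model is a weight vector rather than a set of support vectors. The names `train_linear_svm` and `train_one_vs_all`, and the parameters `lam` and `epochs`, are assumptions of this example.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(positives, negatives, lam=0.01, epochs=50, seed=0):
    """Pegasos-style subgradient training of a linear SVM (no bias term).

    Stand-in for the real SVM trainer; returns the weight vector w.
    """
    rng = random.Random(seed)
    data = [(x, 1.0) for x in positives] + [(x, -1.0) for x in negatives]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)              # decaying step size
            violated = y * dot(w, x) < 1.0     # hinge loss is active
            w = [(1.0 - 1.0 / t) * wj for wj in w]  # shrink (regularizer)
            if violated:
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def train_one_vs_all(training_sets, **kwargs):
    """Train one binary classifier per symbol.

    training_sets: dict mapping symbol -> (positives, negatives), where
    positives are in-class vectors and negatives are out-of-class vectors.
    """
    return {symbol: train_linear_svm(pos, neg, **kwargs)
            for symbol, (pos, neg) in training_sets.items()}
```

The signed value `dot(models[symbol], x)` plays the role of the SVM distance for a segmental feature vector `x`: positive values favor the symbol, negative values favor the rest.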


iv. Posterior Creation:

Finally, for each of the SVMs trained in the previous
step, a posterior probability is estimated by fitting
a sigmoid to a set of SVM distances. The set of
distances for each SVM is computed by classifying a
cross-validation set with that SVM.
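The sigmoid fit can be sketched as below. This is an illustration under stated assumptions: the posterior is modeled as P(in-class | d) = 1 / (1 + exp(A d + B)) for an SVM distance d, and A and B are found by plain gradient descent on the negative log-likelihood of the cross-validation labels. The function names, the learning rate, and the iteration count are choices made for this example, not the optimizer used in our software.

```python
import math


def sigmoid_posterior(distance, a, b):
    """P(in-class | distance) = 1 / (1 + exp(a * distance + b))."""
    z = a * distance + b
    if z >= 0:
        # Rewrite to avoid overflow in exp() for large positive z.
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def fit_sigmoid(distances, labels, lr=0.1, iters=2000):
    """Fit (a, b) on cross-validation data.

    distances: SVM distances of the cross-validation examples
    labels:    1 for in-class examples, 0 for out-of-class examples
    """
    a, b = 0.0, 0.0
    n = len(distances)
    for _ in range(iters):
        grad_a = grad_b = 0.0
        for d, t in zip(distances, labels):
            p = sigmoid_posterior(d, a, b)
            # Derivative of the negative log-likelihood w.r.t. z is (t - p).
            grad_a += (t - p) * d
            grad_b += (t - p)
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b
```

When in-class examples tend to have positive distances, the fitted `a` is negative, so larger distances map to posteriors closer to 1.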


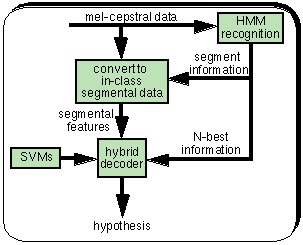

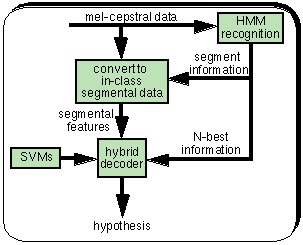

- SVM-based N-best List Rescoring:

In this section, we describe the process of
SVM-based N-best list rescoring. The figure below gives
an overview of the decoding process used in the HMM/SVM
hybrid system. First, the segmental features are
extracted from the mel-cepstral (MFCC) test data using
the segmental information from the baseline HMM
system. In the second step, the N-best list output from
the baseline HMM system is rescored to get the final
hypotheses.

i. Segmental feature generation on an utterance basis:

Instead of generating segmental features on a symbol
basis, as done during the training process, the
segmental features are generated on an utterance
basis. First, the symbol alignments corresponding to
all the utterances in the test database are generated
using the baseline HMM system. Then, the segmental
features for the utterances in the test database are
extracted using the corresponding segmental
information. Note that unlike SVM training, no
feature selection is required during decoding.
Next, the process of decoding is performed.

ii. N-best rescoring:

In the first pass of decoding, the N-best lists
corresponding to each utterance in the test
database are generated by the baseline HMM
system. These N-best lists are then rescored using
the SVM models (posteriors) in the second pass to get
the output hypotheses. The rescoring process is
documented extensively in Aravind Ganapathiraju's
dissertation.
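The second pass can be illustrated with a short sketch. The exact scoring rule is given in the dissertation and is not reproduced here; this example assumes one simple rule, namely that a hypothesis is scored by the sum of the log posteriors of its segments and that the hypothesis with the highest score wins. The function name `rescore_nbest`, the hypothesis format, and the `floor` parameter are assumptions of this example.

```python
import math


def rescore_nbest(nbest, posterior, floor=1e-10):
    """Rescore an N-best list with segment-level posteriors.

    nbest:     list of hypotheses, each a list of (symbol, feature) pairs,
               one pair per aligned segment
    posterior: callable (symbol, feature) -> probability that the segment
               belongs to the symbol
    Returns a list of (score, symbols) sorted from best to worst.
    """
    scored = []
    for hypothesis in nbest:
        # Sum of log posteriors; `floor` guards against log(0).
        score = sum(math.log(max(posterior(symbol, feature), floor))
                    for symbol, feature in hypothesis)
        scored.append((score, [symbol for symbol, _ in hypothesis]))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored
```

Because hypotheses in an N-best list can contain different numbers of segments, a plain sum of log posteriors tends to favor shorter hypotheses. A practical system would need to account for this, for example by combining the score with the first-pass HMM score.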


The overview of the structure and implementation of the
HMM/SVM and HMM/RVM systems presented in this tutorial
will guide the interface to these systems' implementation
in the ISIP Foundation Classes (IFCs). The next tutorial
will cover the details of this interface and will include
examples of how to train and decode using the SVM/HMM hybrid
system. A similar tutorial on the RVM/HMM system will follow
later.
