Why Deepfake Detection Matters

In an era of synthetic media, discerning truth from fiction is increasingly challenging.

Misinformation

Deepfakes can spread false narratives, manipulate public opinion, and undermine trust in institutions. Research shows that exposure to deepfakes significantly increases political distrust.

Fraud Prevention

Synthetic identities and face-swapped images enable sophisticated financial and social-engineering scams. Deepfakes are also used for image-based abuse: women make up 96% of deepfake pornography victims, and 67% of image-based abuse victims report negative mental-health impacts.

Media Literacy

We empower users with tools to critically evaluate digital content and combat digital deception. Our detector helps protect vulnerable groups disproportionately affected by deepfakes and promotes responsible media consumption.

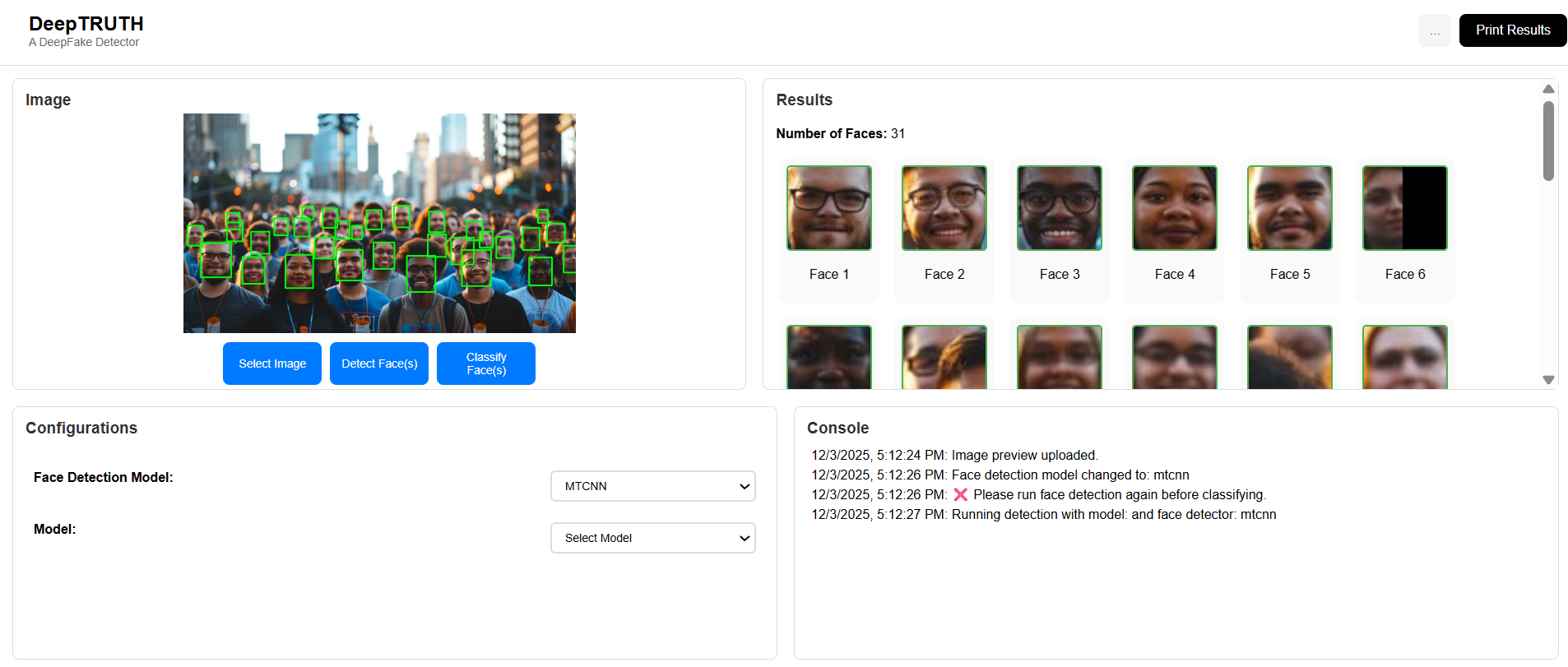

How Our Detector Works

A streamlined, multi-stage AI pipeline built for interpretability, speed, and real-world reliability.

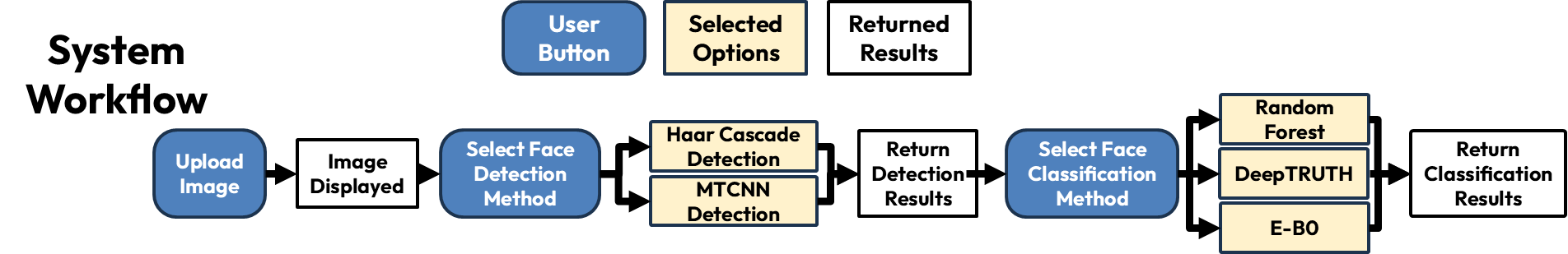

System Workflow

Complete pipeline from image upload to classification results

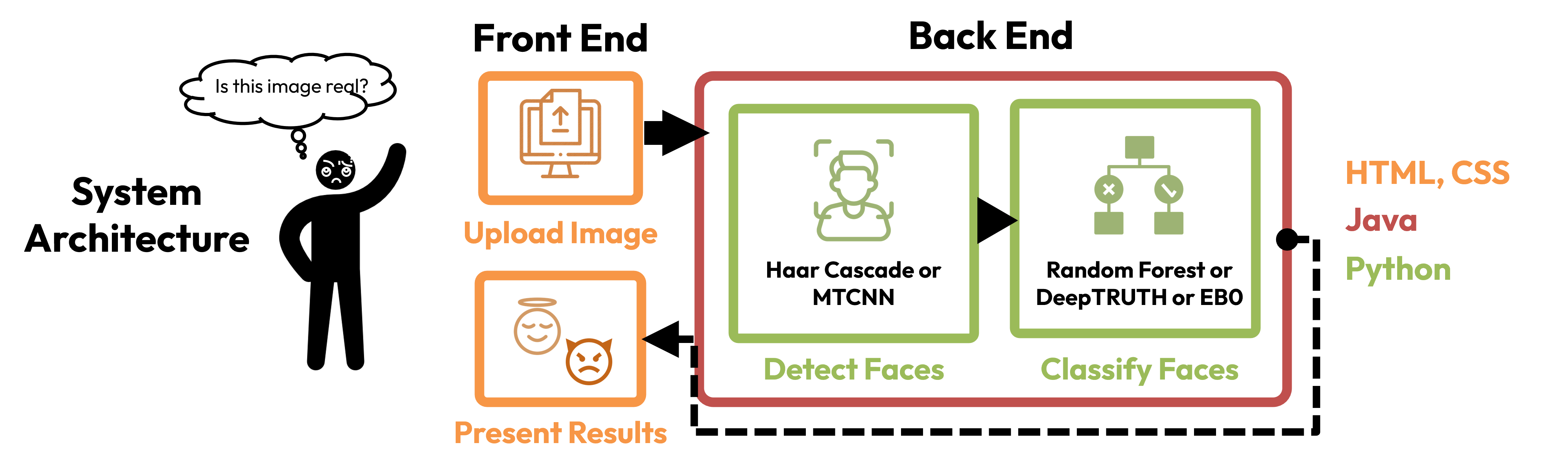

System Architecture

Front-end and back-end components working together for deepfake detection

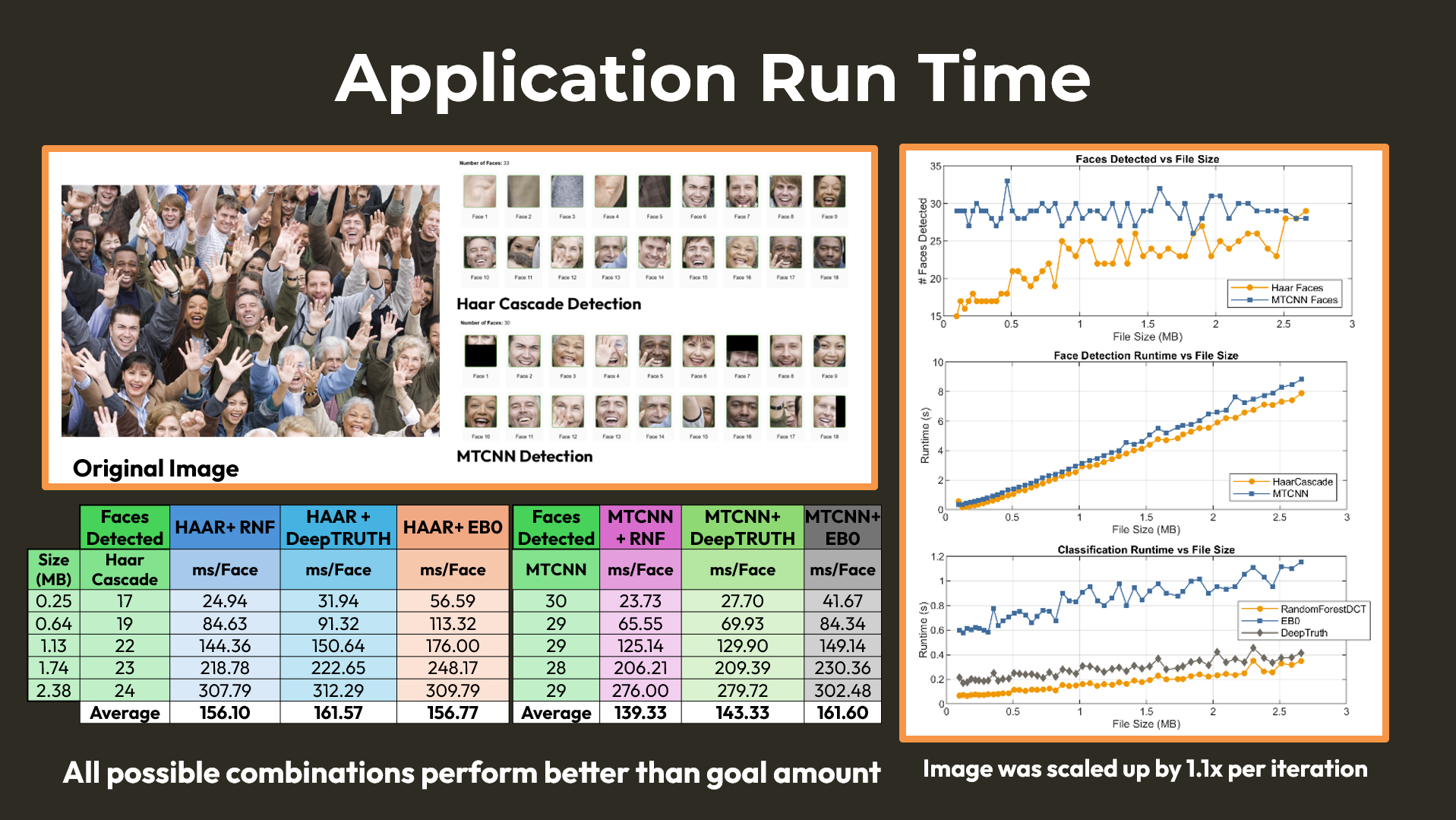

Face Detection

The system offers two face detection methods: Haar Cascade and MTCNN. Each detected face is cropped and analyzed individually, focusing on regions where manipulation typically occurs. The Haar Cascade provides fast CPU-based detection, while MTCNN offers higher accuracy for complex images.
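The cropping step described above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: it assumes the detector has already returned bounding boxes in `(x, y, width, height)` form (the layout OpenCV's Haar cascade `detectMultiScale` uses), and the helper names and the 20% margin are hypothetical choices made for the example.

```python
from typing import List, Sequence, Tuple

# (x, y, width, height) with the origin at the top-left corner of the image.
Box = Tuple[int, int, int, int]


def expand_and_clamp(box: Box, img_w: int, img_h: int, margin: float = 0.2) -> Box:
    """Grow a face box by `margin` on every side, then clamp it to the image."""
    x, y, w, h = box
    pad_x, pad_y = int(w * margin), int(h * margin)
    left = max(0, x - pad_x)
    top = max(0, y - pad_y)
    right = min(img_w, x + w + pad_x)
    bottom = min(img_h, y + h + pad_y)
    return left, top, right - left, bottom - top


def crop_faces(image: Sequence[Sequence[int]], boxes: List[Box],
               margin: float = 0.2) -> List[List[List[int]]]:
    """Return one cropped pixel grid per detected face.

    `image` is a row-major grid of pixel values (a stand-in for a grayscale
    array); each crop would be passed to the classifier individually.
    """
    img_h = len(image)
    img_w = len(image[0]) if img_h else 0
    crops = []
    for box in boxes:
        x, y, w, h = expand_and_clamp(box, img_w, img_h, margin)
        crops.append([list(row[x:x + w]) for row in image[y:y + h]])
    return crops
```

Padding the box keeps some context around the face, such as the hairline and jaw edge, and clamping prevents faces near the border from producing out-of-range slices.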

Classification Models

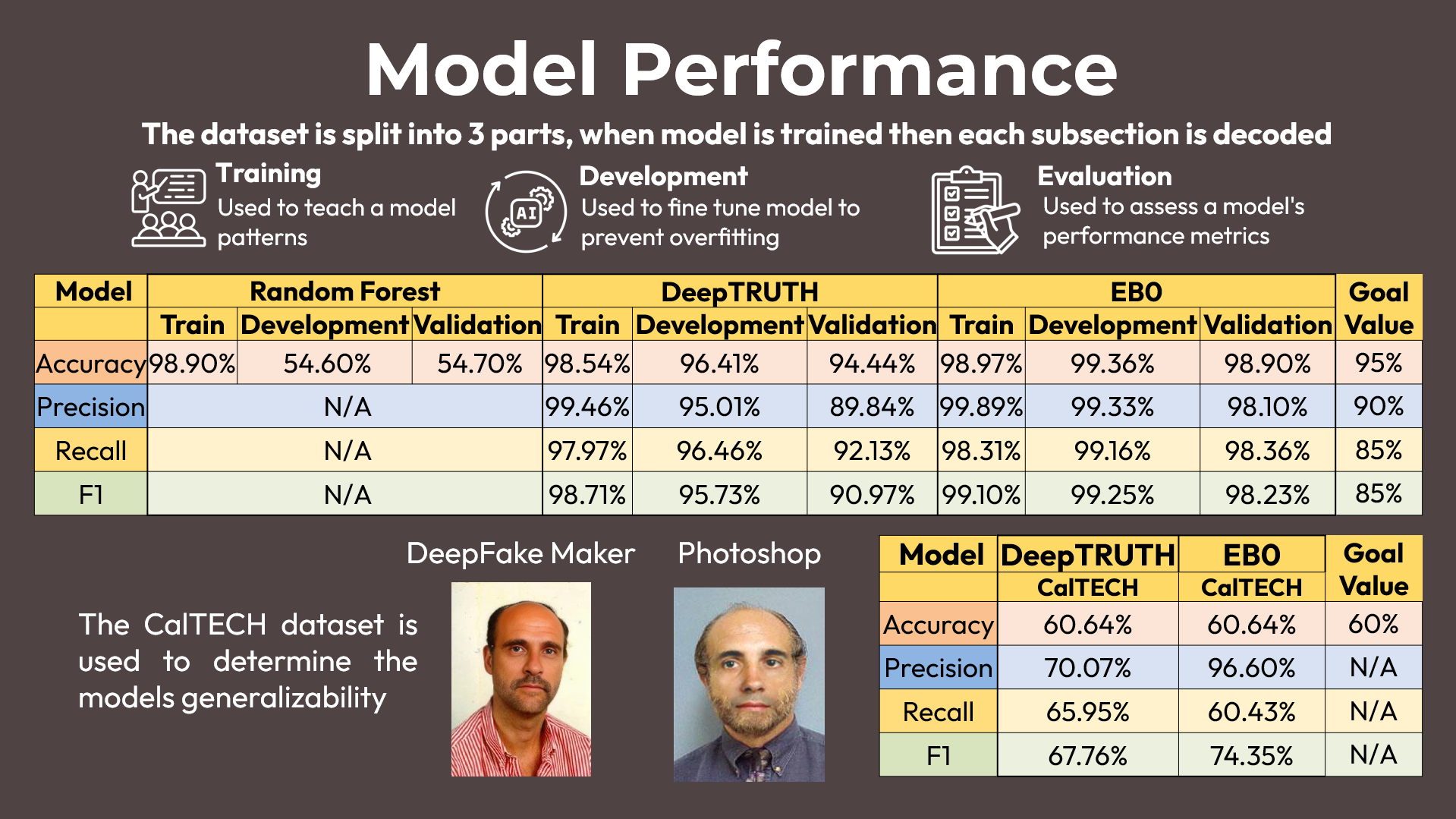

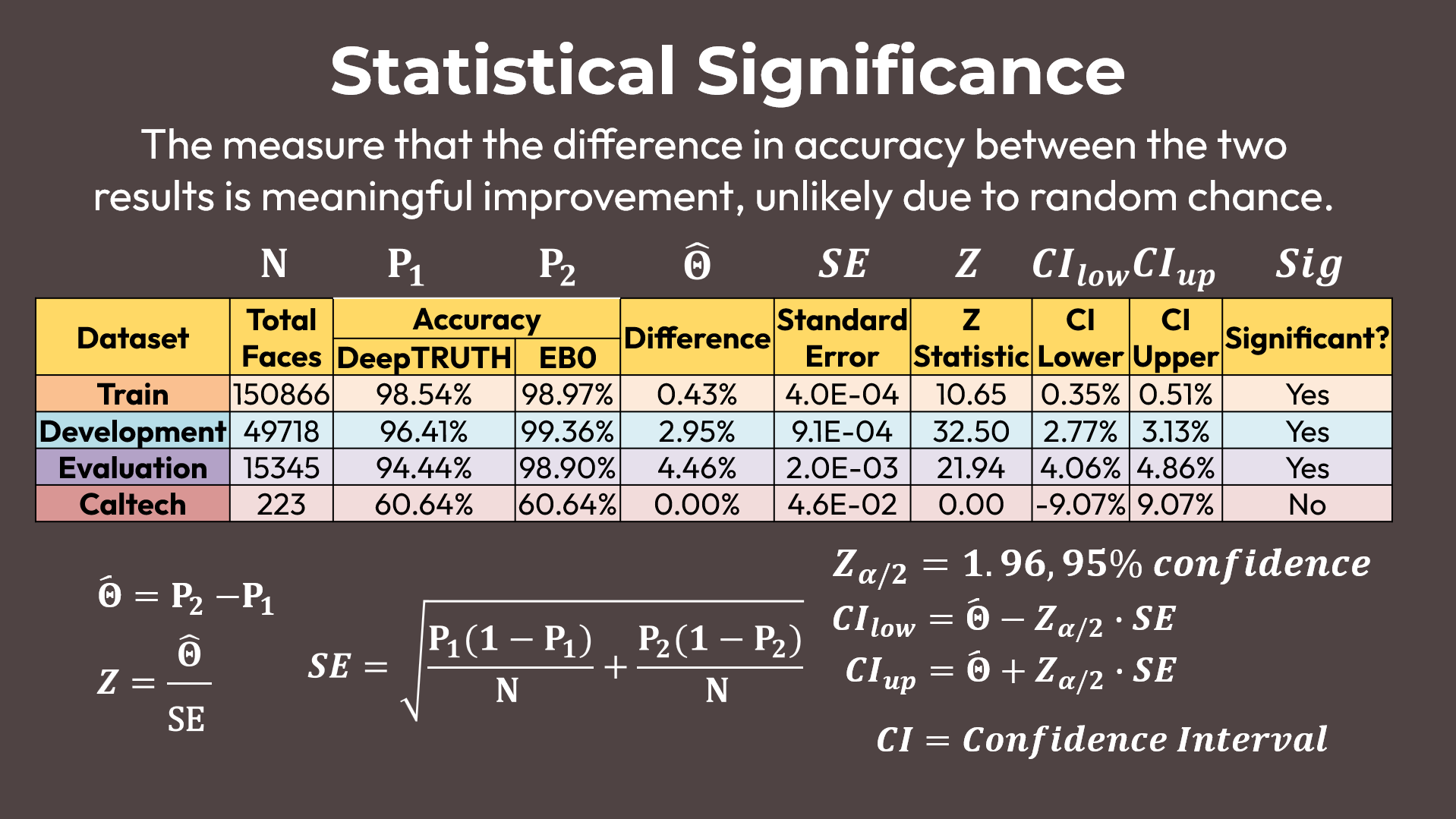

Three classification models are available: Random Forest with DCT features, our custom DeepTRUTH CNN, and EfficientNet B0. Our best model, EfficientNet B0 (EB0), achieves 98.90% accuracy on evaluation data, with 98.10% precision and 98.36% recall. Results include confidence scores and visual explanations.
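For readers less familiar with how accuracy, precision, and recall relate, the sketch below computes all three from a list of labels and predictions. It assumes "fake" is the positive class (encoded as `1`), which the page does not state, and the function name is illustrative.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier.

    Assumption: `positive` marks the "fake" class; everything else is "real".
    """
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1  # fake image correctly flagged
        elif pred == positive:
            fp += 1  # real image wrongly flagged as fake
        elif truth == positive:
            fn += 1  # fake image missed
        else:
            tn += 1  # real image correctly passed
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }
```

Precision answers "of the images flagged as fake, how many really were?", while recall answers "of the fake images, how many were caught?". Reporting both alongside accuracy matters when the two classes are not equally common.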

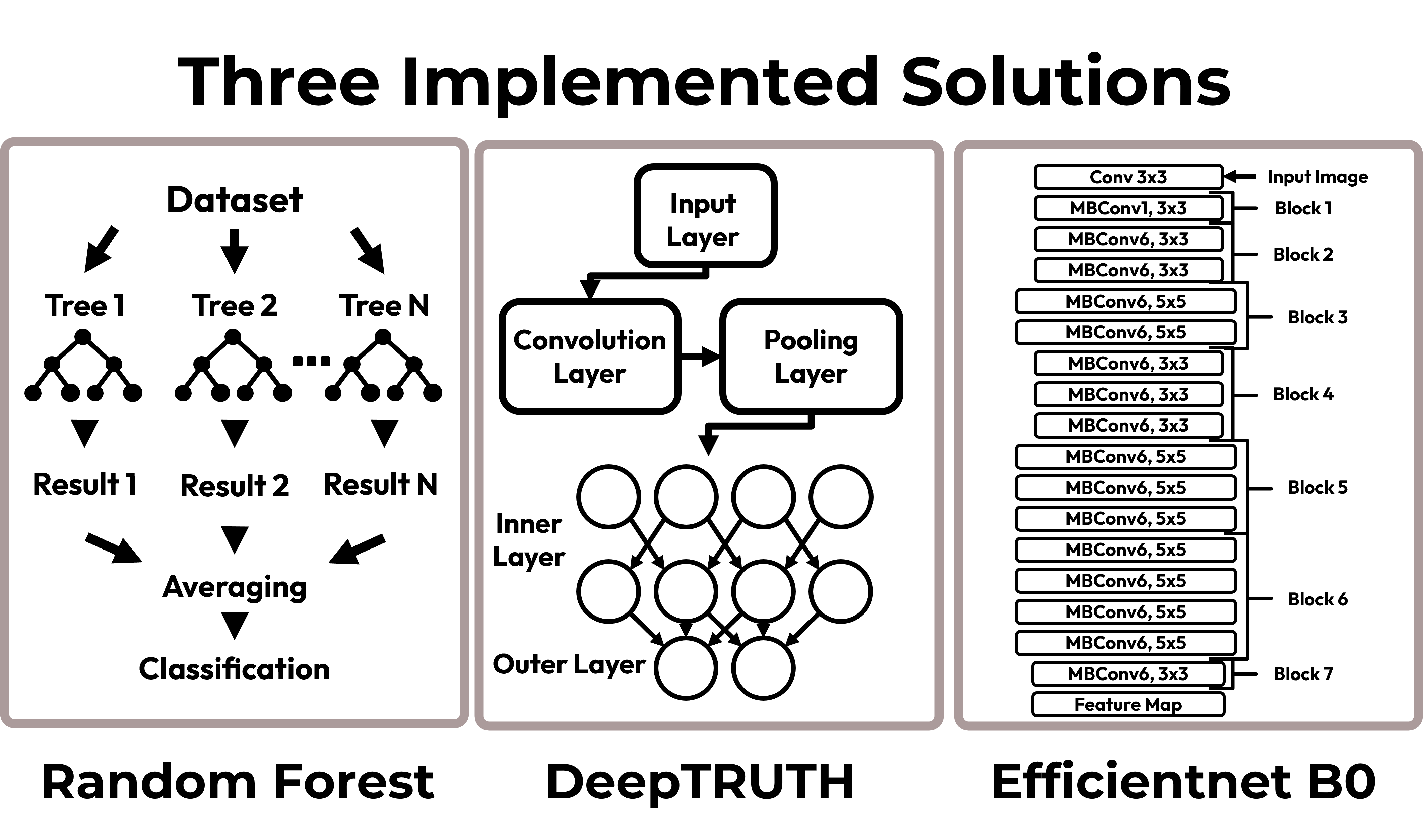

Model Architecture Details

Deep dive into the technical implementation of our three classification approaches

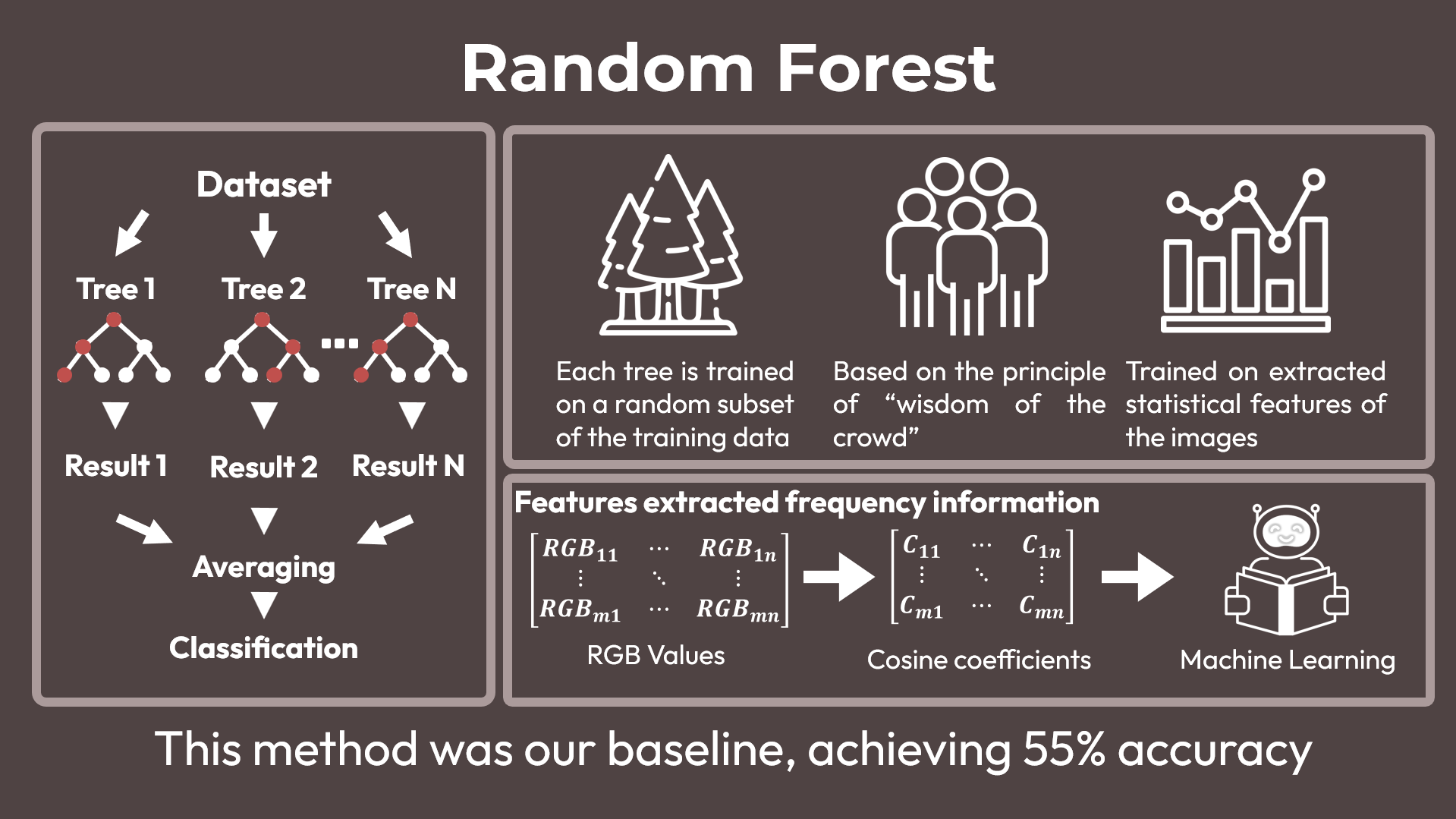

Random Forest

An ensemble of decision trees trained on DCT (discrete cosine transform) features. It serves as a fast baseline, achieving 55% accuracy at low computational cost.
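To make "DCT features" concrete, here is a minimal sketch of one common way to derive them: apply an orthonormal 2D DCT-II to a grayscale patch and keep the low-frequency corner of the coefficient grid. How the project actually selects or normalizes coefficients is not stated, so the `keep` parameter and both function names are assumptions for illustration.

```python
import math


def dct_2d(block):
    """Orthonormal 2D DCT-II of a small grayscale block (list of rows)."""
    n_rows, n_cols = len(block), len(block[0])

    def scale(k, n):
        # Normalization that makes the transform orthonormal.
        return math.sqrt((1.0 if k == 0 else 2.0) / n)

    out = [[0.0] * n_cols for _ in range(n_rows)]
    for u in range(n_rows):
        for v in range(n_cols):
            total = 0.0
            for r in range(n_rows):
                for c in range(n_cols):
                    total += (block[r][c]
                              * math.cos((2 * r + 1) * u * math.pi / (2 * n_rows))
                              * math.cos((2 * c + 1) * v * math.pi / (2 * n_cols)))
            out[u][v] = scale(u, n_rows) * scale(v, n_cols) * total
    return out


def low_frequency_features(block, keep=4):
    """Flatten the top-left `keep` x `keep` DCT coefficients into a vector."""
    coeffs = dct_2d(block)
    rows = min(keep, len(coeffs))
    cols = min(keep, len(coeffs[0]))
    return [coeffs[u][v] for u in range(rows) for v in range(cols)]
```

A feature vector like this could then be fed to a tree ensemble such as scikit-learn's `RandomForestClassifier`. The direct four-loop transform is only practical for small patches; a real pipeline would use an optimized DCT routine.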

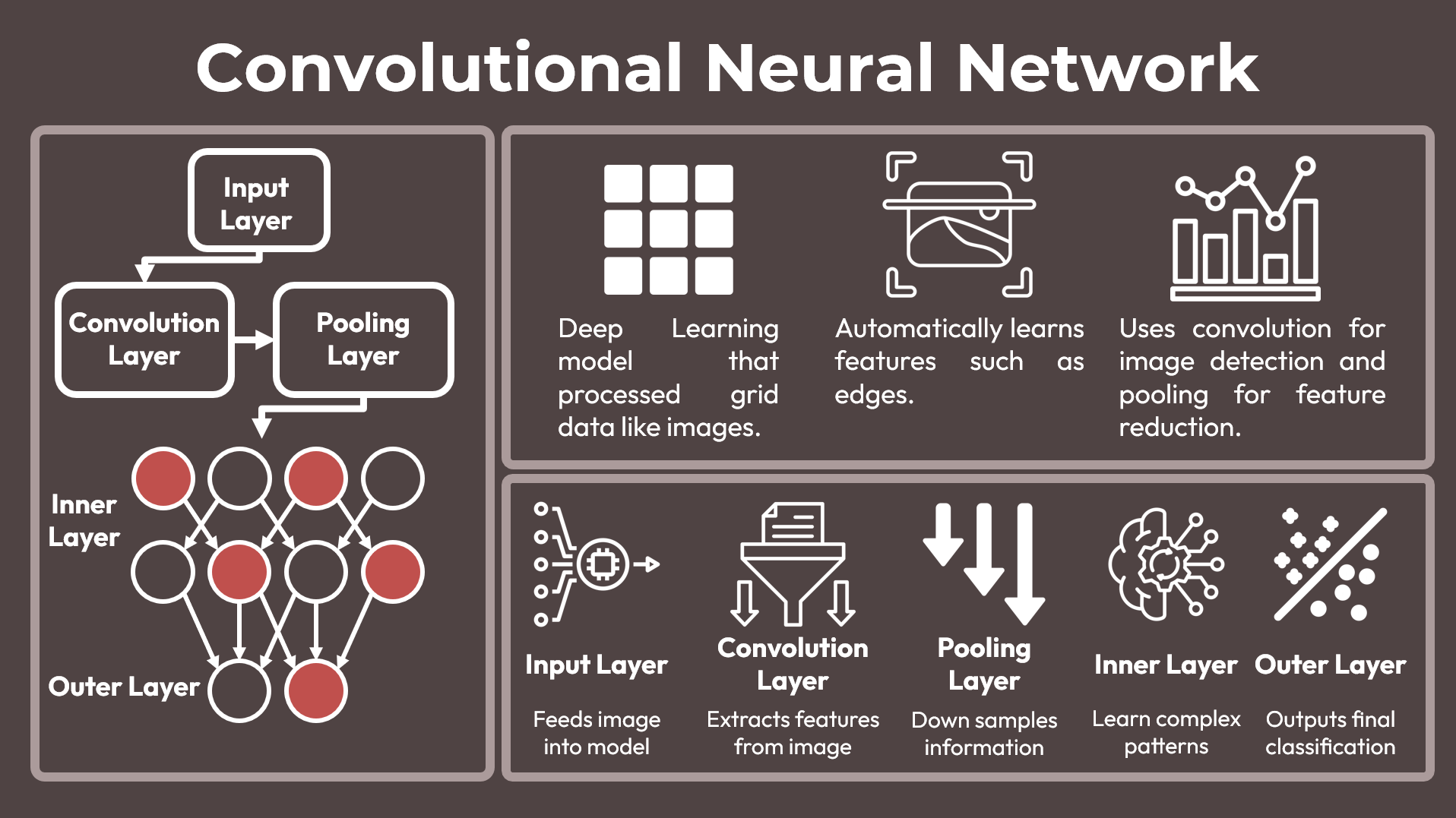

CNN DeepTRUTH

Our custom DeepTRUTH convolutional neural network with multiple layers for automatic feature extraction. Achieves 94.44% accuracy on evaluation data.

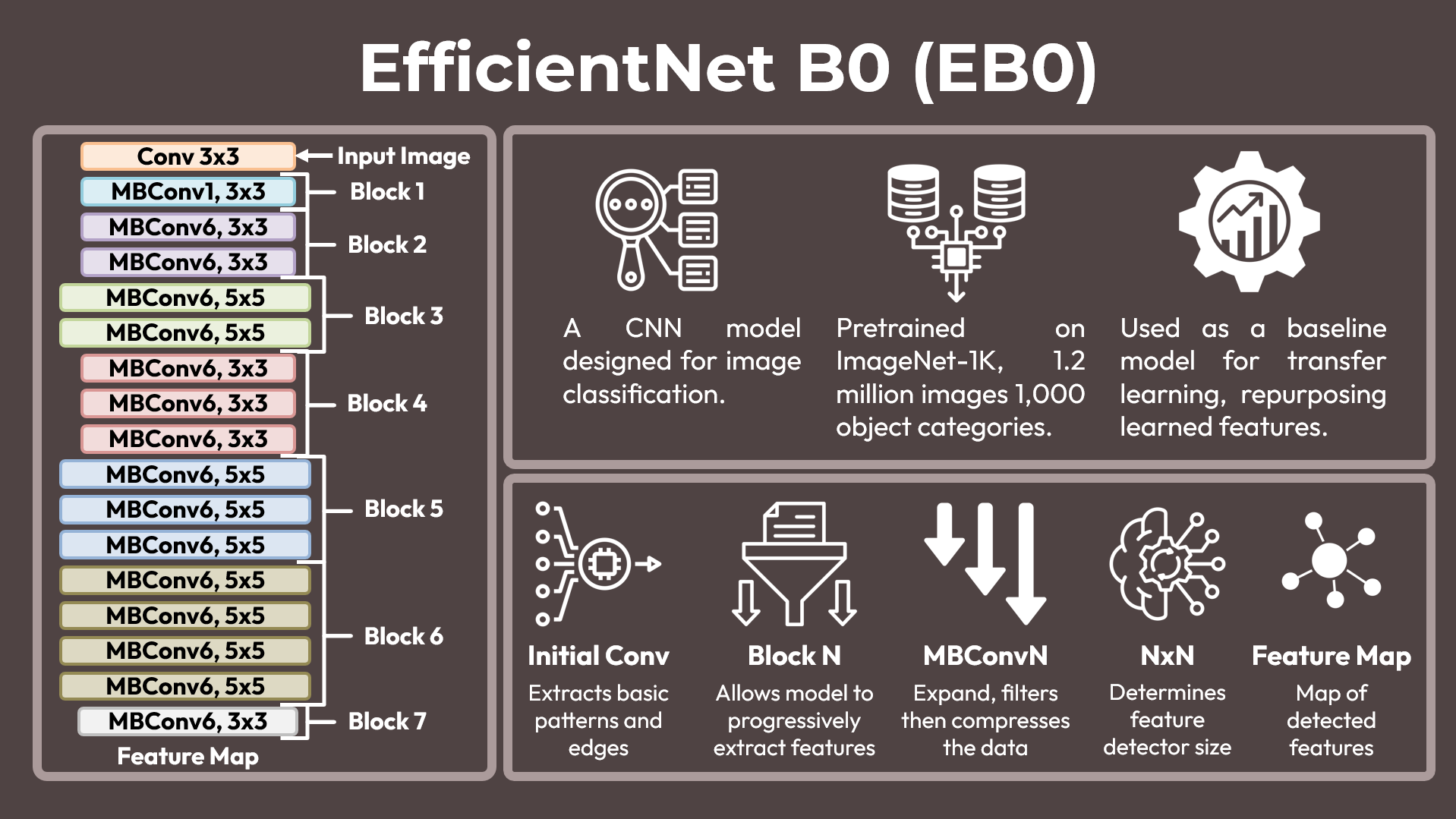

EfficientNet B0

A state-of-the-art architecture adapted through transfer learning from ImageNet. It is our best-performing model, achieving 98.90% accuracy while remaining computationally efficient.

Performance & Results

Model Performance Comparison

Comprehensive evaluation across training, development, and validation datasets.

Application Runtime Analysis

Performance metrics showing detection and classification speed across different file sizes.

Statistical Significance Testing

Statistical validation confirming meaningful performance improvements between models.
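The page does not say which statistical test was used. As one illustration of how two classifiers evaluated on the same images can be compared, the sketch below implements an exact McNemar test; treating it as the project's method would be an assumption, and the function name is hypothetical.

```python
from math import comb


def mcnemar_exact(y_true, pred_a, pred_b):
    """Exact two-sided McNemar test for two models scored on the same samples.

    Returns (only_a_correct, only_b_correct, p_value). Only the samples where
    the models disagree carry information about which one is better.
    """
    only_a = only_b = 0
    for truth, a, b in zip(y_true, pred_a, pred_b):
        if a == truth and b != truth:
            only_a += 1
        elif a != truth and b == truth:
            only_b += 1
    n = only_a + only_b
    if n == 0:
        return only_a, only_b, 1.0  # the models never disagree
    # Under the null hypothesis each disagreement favors either model with
    # probability 0.5, so the smaller count follows Binomial(n, 0.5).
    k = min(only_a, only_b)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return only_a, only_b, min(1.0, 2 * tail)
```

A small p-value suggests the gap between the two models is unlikely to be explained by chance on that evaluation set.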

Meet the Team

Electrical & Computer Engineering Seniors & Faculty Advisor

Jouri Ghazi

Team Lead / Machine Learning Engineer

Designed the deepfake detection pipeline including CNN architectures, training workflows, algorithm development, and full web integration.

Ashton Bryant

Data Research Analyst

Researched data sources and ensured dataset quality. Developed an alternative deepfake dataset used for model generalizability.

Jahtega Djukpen

Data Engineer

Managed project datasets and developed the feature-extraction pipeline that generated standardized inputs essential for reliable model training.

Zacary Louis

Web Developer

Built the project website, creating an interface for showcasing results and deploying the deepfake detection model.

Faculty Advisor

Dr. Joseph Picone

Professor, Electrical & Computer Engineering

Our Commitment to Ethics & Privacy

Privacy First

All uploaded media is immediately deleted.

Transparent Accuracy

Our best model (EfficientNet B0) achieves 98.90% accuracy on evaluation data. Performance may vary on images outside our training distribution.

Responsible Use

This tool is for demonstration purposes only. We prohibit harassment, discrimination, or misuse.