CLASS N-GRAMS

- Recall we previously discussed defining equivalence classes for words that exhibit similar semantic and grammatical behavior.

- Class-based language models have been shown to be effective for reducing memory requirements in real-time speech applications and for supporting rapid adaptation of language models.

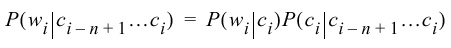

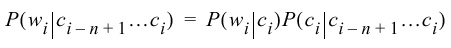

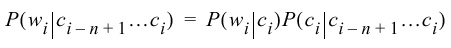

- A word probability can be conditioned on the previous N-1 word classes:

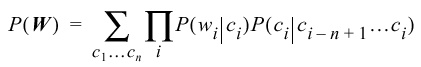

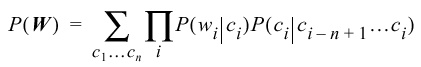

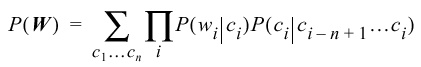

- We can express the probability of a word sequence in terms of class N-grams:

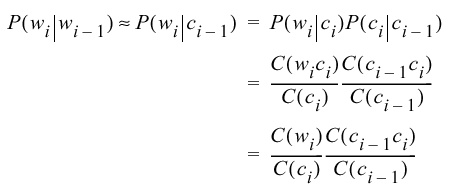

- If the classes are non-overlapping:

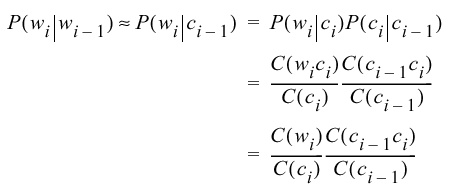

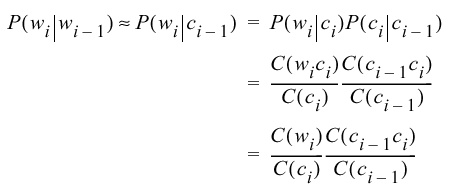

- If we consider the case of a bigram language model, we can derive a simple estimate for a bigram probability in terms of word and class counts:
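One standard form of this estimate, assuming non-overlapping classes (each word w belongs to exactly one class c), is P(w_i | w_{i-1}) ≈ [C(w_i) / C(c_i)] · [C(c_{i-1} c_i) / C(c_{i-1})], where C(·) denotes a training-set count. The Python sketch below computes it from toy data. The function names, the class map, and the sentences are illustrative assumptions, not taken from the lecture, and no smoothing is applied.

```python
from collections import Counter


def train_class_bigram(sentences, word_to_class):
    """Collect word, class, and class-bigram counts.

    Assumes non-overlapping classes: word_to_class maps every word
    to exactly one class label.
    """
    word_counts = Counter()
    class_counts = Counter()
    class_bigram_counts = Counter()
    for sentence in sentences:
        classes = [word_to_class[w] for w in sentence]
        word_counts.update(sentence)
        class_counts.update(classes)
        # Adjacent class pairs within the sentence.
        class_bigram_counts.update(zip(classes, classes[1:]))
    return word_counts, class_counts, class_bigram_counts


def class_bigram_prob(word, prev_word, word_to_class, counts):
    """Estimate P(word | prev_word) as P(word | class) * P(class | prev class)."""
    word_counts, class_counts, class_bigram_counts = counts
    c_prev = word_to_class[prev_word]
    c = word_to_class[word]
    if class_counts[c] == 0 or class_counts[c_prev] == 0:
        return 0.0  # unseen class; a real system would smooth here
    p_word_given_class = word_counts[word] / class_counts[c]
    # Simple estimate: uses the total count of the previous class as the denominator.
    p_class_given_prev = class_bigram_counts[(c_prev, c)] / class_counts[c_prev]
    return p_word_given_class * p_class_given_prev


# Hypothetical toy data for illustration only.
word_to_class = {
    "meet": "VERB",
    "on": "PREP",
    "in": "PREP",
    "monday": "DAY",
    "tuesday": "DAY",
}
sentences = [
    ["meet", "on", "monday"],
    ["meet", "on", "tuesday"],
    ["meet", "on", "monday"],
]
counts = train_class_bigram(sentences, word_to_class)

p_seen = class_bigram_prob("monday", "on", word_to_class, counts)
p_unseen = class_bigram_prob("tuesday", "in", word_to_class, counts)
print(p_seen, p_unseen)
```

In this toy corpus the word pair "in tuesday" never occurs, yet it still receives a nonzero probability because "in" shares a class with "on". This sharing of statistics across class members is also why a class model needs far fewer parameters than a word N-gram.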

- Class N-grams have not provided significant improvements in performance, but have provided a simple means of integrating linguistic knowledge and data-driven statistical knowledge.