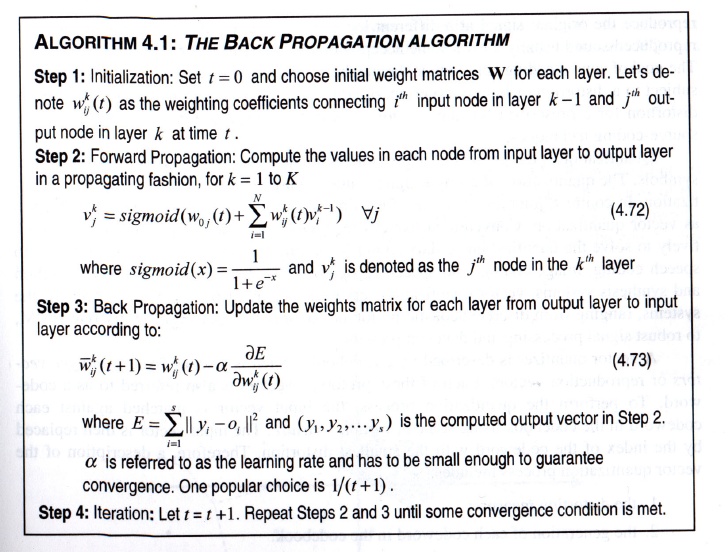

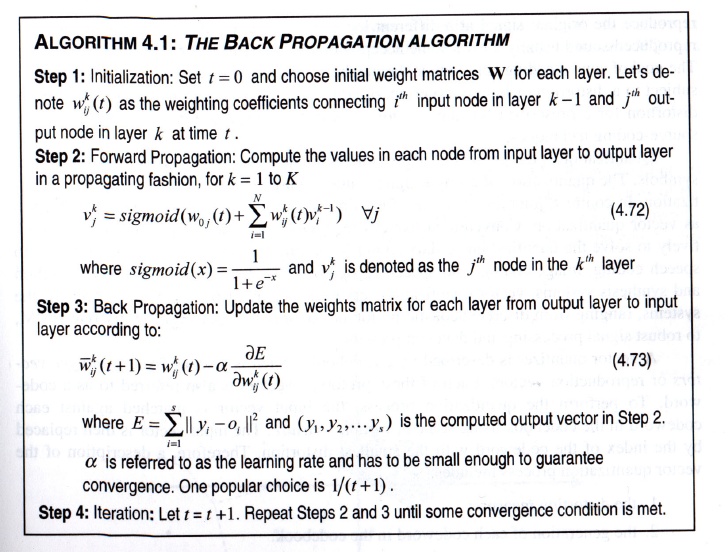

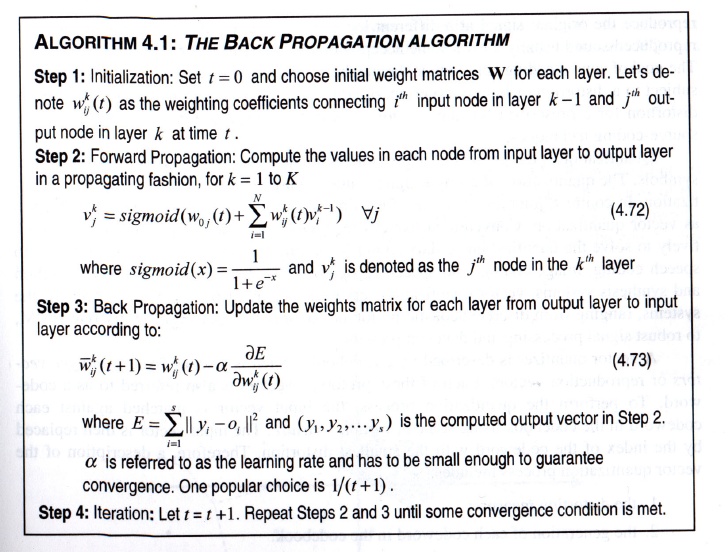

MLP TRAINING: BACK PROPAGATION

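By incorporating a nonlinear transfer function that is differentiable, we can derive an iterative gradient descent training algorithm for a multi-layer perceptron (MLP): differentiability lets the chain rule carry the gradient of the output error backward through every layer to each weight. This algorithm is known as back propagation.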
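
As an illustration only, here is a minimal sketch of back propagation in Python. It assumes one hidden layer, the logistic sigmoid as the differentiable transfer function, a squared-error cost $E = \tfrac{1}{2}\sum_k (y_k - t_k)^2$, and online (per-pattern) gradient descent. Under those assumptions the output deltas are $\delta_k = (y_k - t_k)\,y_k(1 - y_k)$, the hidden deltas are $\delta_j = h_j(1 - h_j)\sum_k \delta_k w_{kj}$, and each weight moves by $-\eta\,\delta \cdot (\text{input to that weight})$. The class `MLP`, its methods, the learning rate, the hidden-layer size, and the XOR data are hypothetical choices, not taken from the slide, whose own equations may use different notation or a different cost.

```python
import math
import random


def sigmoid(z):
    # Logistic sigmoid, split by sign so math.exp never overflows.
    # Its derivative is sigmoid(z) * (1 - sigmoid(z)), reused in the backward pass.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class MLP:
    """Hypothetical one-hidden-layer MLP with sigmoid units and squared-error cost."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # Each weight row ends with a bias weight, fed by a constant input of 1.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(self, x):
        xb = list(x) + [1.0]  # input plus bias
        h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1]
        hb = h + [1.0]  # hidden activations plus bias
        y = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return xb, hb, y

    def loss(self, x, t):
        # E = 1/2 * sum_k (y_k - t_k)^2
        y = self.forward(x)[2]
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, t))

    def gradients(self, x, t):
        xb, hb, y = self.forward(x)
        # Output-layer deltas: dE/dnet_k = (y_k - t_k) * y_k * (1 - y_k)
        d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, t)]
        # Hidden-layer deltas: output deltas sent back through w2 (bias column skipped)
        d_hid = [
            hb[j] * (1.0 - hb[j]) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
            for j in range(len(self.w1))
        ]
        # dE/dw = delta of the receiving unit * activation of the sending unit
        g1 = [[dj * v for v in xb] for dj in d_hid]
        g2 = [[dk * v for v in hb] for dk in d_out]
        return g1, g2

    def train_step(self, x, t, lr=0.5):
        # One online gradient-descent update: w <- w - lr * dE/dw
        g1, g2 = self.gradients(x, t)
        for weights, grads in ((self.w1, g1), (self.w2, g2)):
            for row, grow in zip(weights, grads):
                for i, g in enumerate(grow):
                    row[i] -= lr * g


# Usage: train on XOR, which a single-layer perceptron cannot represent.
XOR = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [0])]
net = MLP(n_in=2, n_hidden=3, n_out=1, seed=0)
before = sum(net.loss(x, t) for x, t in XOR)
for _ in range(5000):
    for x, t in XOR:
        net.train_step(x, t, lr=0.5)
after = sum(net.loss(x, t) for x, t in XOR)
print(f"total squared error: {before:.4f} -> {after:.4f}")
```

Plain lists keep the sketch dependency-free; a practical implementation would use vectorized matrix operations. Comparing `gradients` against central finite differences of `loss` is a cheap way to confirm that a hand-derived backward pass is correct.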

The MLP network has been the most popular architecture for speech processing applications due to the existence of robust training algorithms and its powerful classification properties.