PRUNING A TREE IMPROVES GENERALIZATION

The most fundamental problem with decision trees is that they "overfit" the data and hence do not provide good generalization. A solution to this problem is to prune the tree:

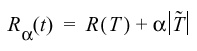

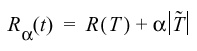

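Cost-complexity pruning is a popular pruning technique.

Cost-complexity can be defined as:

$$R_\alpha(T) = R(T) + \alpha\,|\tilde{T}|$$

where $R(T)$ is the error of the subtree $T$ on the training data (for example, its misclassification rate), $\alpha \ge 0$ is a complexity parameter that penalizes larger trees, and $|\tilde{T}|$ represents the number of terminal nodes in the subtree.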
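To make the cost-complexity formula $R_\alpha(T) = R(T) + \alpha\,|\tilde{T}|$ concrete, here is a minimal Python sketch. It assumes $R(T)$ is the training misclassification rate (impurity measures are another common choice); the `Node` class, its `errors` field, and the example tree are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    # Training examples this node would misclassify if it were a leaf
    # predicting its majority class (hypothetical bookkeeping field).
    errors: int
    children: List["Node"] = field(default_factory=list)


def leaves(node: Node) -> List[Node]:
    """Return the terminal nodes of the subtree rooted at `node`."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


def cost_complexity(node: Node, alpha: float, n_train: int) -> float:
    """R_alpha(T) = R(T) + alpha * |T~|, with R(T) the training error rate."""
    terminal = leaves(node)
    r_t = sum(leaf.errors for leaf in terminal) / n_train
    return r_t + alpha * len(terminal)


# Hypothetical tree grown on 100 training examples.
tree = Node(errors=40, children=[
    Node(errors=10, children=[Node(errors=2), Node(errors=3)]),
    Node(errors=5),
])
print(round(cost_complexity(tree, alpha=0.0, n_train=100), 4))   # 0.1
print(round(cost_complexity(tree, alpha=0.02, n_train=100), 4))  # 0.16
```

With $\alpha = 0$ only training error counts; as $\alpha$ grows, each extra leaf costs more, so smaller subtrees become preferable.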

Each internal node in the tree can be scored by its impact on the cost-complexity if the subtree below it were pruned, that is, collapsed into a single leaf.
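One standard way to score this impact is the weakest-link measure used in CART: for an internal node $t$ with subtree $T_t$, $g(t) = \frac{R(t) - R(T_t)}{|\tilde{T}_t| - 1}$, where $R(t)$ is the error if $t$ were collapsed into a leaf. $g(t)$ is the value of $\alpha$ at which pruning $t$ leaves $R_\alpha$ unchanged, so the node with the smallest $g(t)$ is the cheapest to prune. The sketch below assumes error rates are counts divided by the training-set size; the `Node` class and example tree are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    name: str
    errors: int  # training errors if this node were collapsed into a leaf
    children: List["Node"] = field(default_factory=list)


def subtree_stats(node: Node) -> Tuple[int, int]:
    """Return (training errors summed over the subtree's leaves, number of leaves)."""
    if not node.children:
        return node.errors, 1
    total_errors, total_leaves = 0, 0
    for child in node.children:
        e, n = subtree_stats(child)
        total_errors += e
        total_leaves += n
    return total_errors, total_leaves


def weakest_link_scores(node: Node, n_train: int,
                        out: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Compute g(t) = (R(t) - R(T_t)) / (|T~_t| - 1) for every internal node t."""
    if out is None:
        out = {}
    if node.children:
        sub_errors, n_leaves = subtree_stats(node)
        # Error rates are counts / n_train, so divide the count difference once.
        out[node.name] = (node.errors - sub_errors) / (n_train * (n_leaves - 1))
        for child in node.children:
            weakest_link_scores(child, n_train, out)
    return out


tree = Node("root", 40, [
    Node("left", 10, [Node("left.a", 2), Node("left.b", 3)]),
    Node("right", 5),
])
print(weakest_link_scores(tree, n_train=100))
# {'root': 0.15, 'left': 0.05}
```

Here `left` has the smallest score, so it is the weakest link: collapsing it gives up the least training accuracy per leaf removed.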

Nodes are successively pruned until certain thresholds (heuristics) are satisfied.
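A minimal sketch of this successive pruning loop, assuming the weakest-link score $g(t)$ from CART and a hypothetical `max_alpha` threshold as the stopping heuristic. Each step collapses the internal node with the smallest $g(t)$, producing a nested sequence of subtrees indexed by increasing $\alpha$. Unlike CART, which prunes all nodes tied for the minimum at once, this simplified version prunes one node per step.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Node:
    name: str
    errors: int  # training errors if this node were collapsed into a leaf
    children: List["Node"] = field(default_factory=list)


def subtree_stats(node: Node) -> Tuple[int, int]:
    """Return (training errors over the subtree's leaves, number of leaves)."""
    if not node.children:
        return node.errors, 1
    errs = count = 0
    for child in node.children:
        e, c = subtree_stats(child)
        errs += e
        count += c
    return errs, count


def internal_nodes(node: Node):
    """Yield every non-leaf node in the subtree, parents before children."""
    if node.children:
        yield node
        for child in node.children:
            yield from internal_nodes(child)


def weakest_link(tree: Node, n_train: int) -> Tuple[float, Node]:
    """Return (g, node) for the internal node with the smallest g(t)."""
    best = None
    for t in internal_nodes(tree):
        sub_errs, n_leaves = subtree_stats(t)
        g = (t.errors - sub_errs) / (n_train * (n_leaves - 1))
        if best is None or g < best[0]:
            best = (g, t)
    return best


def prune_sequence(tree: Node, n_train: int,
                   max_alpha: float = float("inf")) -> List[Tuple[float, Node]]:
    """Collapse the weakest link repeatedly; return [(alpha, subtree), ...]."""
    tree = copy.deepcopy(tree)  # leave the caller's tree untouched
    sequence = [(0.0, copy.deepcopy(tree))]
    while tree.children:
        g, t = weakest_link(tree, n_train)
        if g > max_alpha:  # stopping heuristic: pruning would cost too much
            break
        t.children = []  # collapse t into a leaf
        sequence.append((g, copy.deepcopy(tree)))
    return sequence


tree = Node("root", 40, [
    Node("left", 10, [Node("left.a", 2), Node("left.b", 3)]),
    Node("right", 5),
])
for alpha, subtree in prune_sequence(tree, n_train=100):
    print(alpha, subtree_stats(subtree)[1])
# 0.0 3
# 0.05 2
# 0.25 1
```

Note that $g$ for the root rises from 0.15 to 0.25 once `left` is collapsed, which is why the scores must be recomputed after every pruning step.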

By pruning the nodes that are far too specific to the training set, we hope the tree will generalize better. In practice, we use techniques such as cross-validation and data held out from training to better calibrate the generalization properties.
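As a sketch of the held-out approach, assume each node also records a hypothetical `val_errors` count (validation examples it would misclassify as a leaf). The code builds the weakest-link pruning sequence and keeps the subtree with the fewest validation errors, breaking ties toward the smaller tree. With k-fold cross-validation one would instead average the validation error for each $\alpha$ across folds; that variant is not shown here.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Node:
    name: str
    errors: int      # training errors if this node were a leaf
    val_errors: int  # held-out validation errors if this node were a leaf
    children: List["Node"] = field(default_factory=list)


def leaves(node: Node) -> List[Node]:
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


def internal_nodes(node: Node):
    if node.children:
        yield node
        for child in node.children:
            yield from internal_nodes(child)


def g(t: Node, n_train: int) -> float:
    """Weakest-link score (R(t) - R(T_t)) / (|T~_t| - 1)."""
    sub = leaves(t)
    sub_errors = sum(leaf.errors for leaf in sub)
    return (t.errors - sub_errors) / (n_train * (len(sub) - 1))


def prune_sequence(tree: Node, n_train: int) -> List[Tuple[float, Node]]:
    tree = copy.deepcopy(tree)
    seq = [(0.0, copy.deepcopy(tree))]
    while tree.children:
        t = min(internal_nodes(tree), key=lambda node: g(node, n_train))
        alpha = g(t, n_train)
        t.children = []  # collapse the weakest link into a leaf
        seq.append((alpha, copy.deepcopy(tree)))
    return seq


def validation_errors(tree: Node) -> int:
    return sum(leaf.val_errors for leaf in leaves(tree))


def select_by_validation(tree: Node, n_train: int) -> Tuple[float, Node]:
    """Return the (alpha, subtree) pair with the fewest validation errors."""
    seq = prune_sequence(tree, n_train)
    # Scan from most to least pruned so ties go to the smaller tree.
    return min(reversed(seq), key=lambda pair: validation_errors(pair[1]))


# Hypothetical tree: 100 training and 50 validation examples.
tree = Node("root", 40, 20, [
    Node("left", 10, 6, [Node("left.a", 2, 4), Node("left.b", 3, 4)]),
    Node("right", 5, 3),
])
alpha, best = select_by_validation(tree, n_train=100)
print(alpha, [leaf.name for leaf in leaves(best)])  # 0.05 ['left', 'right']
```

In this made-up example the split under `left` lowers training error but raises validation error, so the held-out data selects the pruned tree. scikit-learn exposes the same idea through the `ccp_alpha` parameter of `DecisionTreeClassifier` and its `cost_complexity_pruning_path` method, using total leaf impurity rather than misclassification counts as $R(T)$.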
